BGP Support for Scaling Virtual Services

Overview

One of the ways Avi Vantage adds load balancing capacity for a virtual service is to place the virtual service on additional Service Engines (SEs). For instance, capacity can be added for a virtual service when needed by scaling out the virtual service on to additional SEs within the SE group, then removing (scaling in) the additional SEs when no longer needed. In this case, the primary SE for the virtual service coordinates distribution of the virtual service traffic among the other SEs, while also continuing to process some of the virtual service’s traffic.

An alternative method for scaling a virtual service is to use a Border Gateway Protocol (BGP) feature, route health injection (RHI), along with a layer 3 routing feature, equal cost multi-path (ECMP). Using Route Health Injection (RHI) with ECMP for virtual service scaling avoids the managerial overhead placed upon the primary SE to coordinate the scaled out traffic among the SEs.

BGP is supported in legacy (active/standby) as well as elastic (active/active and N+M) high availability modes.

If a virtual service is marked down by its health monitor or for any other reason, the Avi SE withdraws the route advertisement to its virtual IP (VIP) and restores the same only when the virtual service is marked up again.

Notes on Limits

Service Engine Count

By default, Avi Vantage supports a maximum of four SEs per virtual service, and this can be increased to a maximum of 64 SEs. Each SE uses RHI to advertise a /32 host route to the virtual service’s VIP address, and is able to accept the traffic. The upstream router uses ECMP to select a path to one of the SEs.

The limit on SE count is imposed by the ECMP support on the upstream router. If the router supports up to 64 equal cost routes, then a virtual service enabled for RHI can be supported on up to 64 SEs. Likewise, if the router supports a lesser number of paths, then the virtual service count enabled for RHI will be lower.

Subnets and Peers

Avi Vantage supports 4 distinct subnets with any number of peers in those 4 subnets. Consequently, a VIP can be advertised on more than 4 peers as long as those peers belong to 4 or less subnets. To illustrate:

- A VIP can be advertised to 8 peers, all belonging to single subnet.

- A VIP can be advertised to 4 pairs of peers (once again, 8 peers), with each pair belonging to separate subnet.

Supported Ecosystem

BGP-based scaling is supported on the following.

- VMware

- Linux server (bare-metal) cloud

- OpenShift and Kubernetes

Note: Peering with OpenStack routers is not supported. However, peering with an external router is possible.

BGP-based Scaling

NSX Advanced Load Balancer supports use of the following routing features to dynamically perform virtual service load balancing and scaling.

- Route health injection (RHI): RHI allows traffic to reach a VIP that is not in the same subnet as its SE. The Service Engine (SE) where a virtual service is located advertises a host route to the VIP for that virtual service, with the SE’s IP address as the next-hop router address. Based on this update, the BGP peer connected to the SE updates its route table to use the SE as the next hop for reaching the VIP. The peer BGP router also advertises itself to its upstream BGP peers as a next hop for reaching the VIP.

- Equal cost multi-path (ECMP): Higher bandwidth for the VIP is provided by load sharing its traffic across multiple physical links to the SE(s). If the SE has multiple links to the BGP peer, the SE advertises the VIP host route on each of those links. The BGP peer router sees multiple next-hop paths to the virtual service’s VIP, and uses ECMP to balance traffic across the paths. If the virtual service is scaled out to multiple SEs, each SE advertises the VIP, on each of its links to the peer BGP router.

When a virtual service enabled for BGP is placed on its SE, that SE establishes a BGP peer session with each of its next-hop BGP peer routers. The SE then performs RHI for the virtual service’s VIP, by advertising a host route (/32 network mask) to the VIP. The SE sends the advertisement as a BGP route update to each of its BGP peers. When a BGP peer receives this update from the SE, the peer updates its own route table with a route to the VIP that uses the SE as the next hop. Typically, the BGP peer also advertises the VIP route to its other BGP peers.

The BGP peer IP addresses, as well as the local Autonomous System (AS) number and a few other settings, are specified in a BGP profile on the Controller. RHI support is disabled (default) or enabled within the individual virtual service’s configuration. If the SE has more than one link to the same BGP peer, this also enables ECMP support for the VIP. The SE advertises a separate host route to the VIP on each of the SE interfaces with the BGP peer.

If the SE fails, the BGP peers withdraw the routes that were advertised to them by the SE.

BGP Profile Modifications

In NSX Advanced Load Balancer BGP peer changes are handled gracefully as explained below.

- If a new peer is added to the BGP profile, the virtual service IP is advertised to the new BGP peer router without needing to disable/enable the virtual service.

- If a BGP peer is deleted from the BGP profile, any virtual service IPs that had been advertised to the BGP peer will be withdrawn.

- When a BGP peer IP is updated, it is handled as an add/ delete of the BGP peer.

BGP Upstream Router Configuration

The BGP control plane can hog the CPU on the router in case of scale setups. Changes to CoPP policy are needed to have more BGP packets on the router, or this can lead to BGP packets getting dropped on the router when churn happens.

Note: The ECMP route group or ECMP next-hop group on the router could exhaust if the unique SE BGP next-hops advertised for different set of virtual service VIPs. When such exhaustion happens, the routers could fall back to a single SE next-hop causing traffic issues.

Example:

The following is the sample config on a Dell S4048 switch for adding 5k network entries and 20k paths:

w1g27-avi-s4048-1#show ip protocol-queue-mapping

Protocol Src-Port Dst-Port TcpFlag Queue EgPort Rate (kbps)

-------- -------- -------- ------- ----- ------ -----------

TCP (BGP) any/179 179/any _ Q9 _ 10000

UDP (DHCP) 67/68 68/67 _ Q10 _ _

UDP (DHCP-R) 67 67 _ Q10 _ _

TCP (FTP) any 21 _ Q6 _ _

ICMP any any _ Q6 _ _

IGMP any any _ Q11 _ _

TCP (MSDP) any/639 639/any _ Q11 _ _

UDP (NTP) any 123 _ Q6 _ _

OSPF any any _ Q9 _ _

PIM any any _ Q11 _ _

UDP (RIP) any 520 _ Q9 _ _

TCP (SSH) any 22 _ Q6 _ _

TCP (TELNET) any 23 _ Q6 _ _

VRRP any any _ Q10 _ _

MCAST any any _ Q2 _ _

w1g27-avi-s4048-1#show cpu-queue rate cp

Service-Queue Rate (PPS) Burst (Packets)

-------------- ----------- ----------

Q0 600 512

Q1 1000 50

Q2 300 50

Q3 1300 50

Q4 2000 50

Q5 400 50

Q6 400 50

Q7 400 50

Q8 600 50

Q9 30000 40000

Q10 600 50

Q11 300 50

SE-Router Link Types Supported with BGP

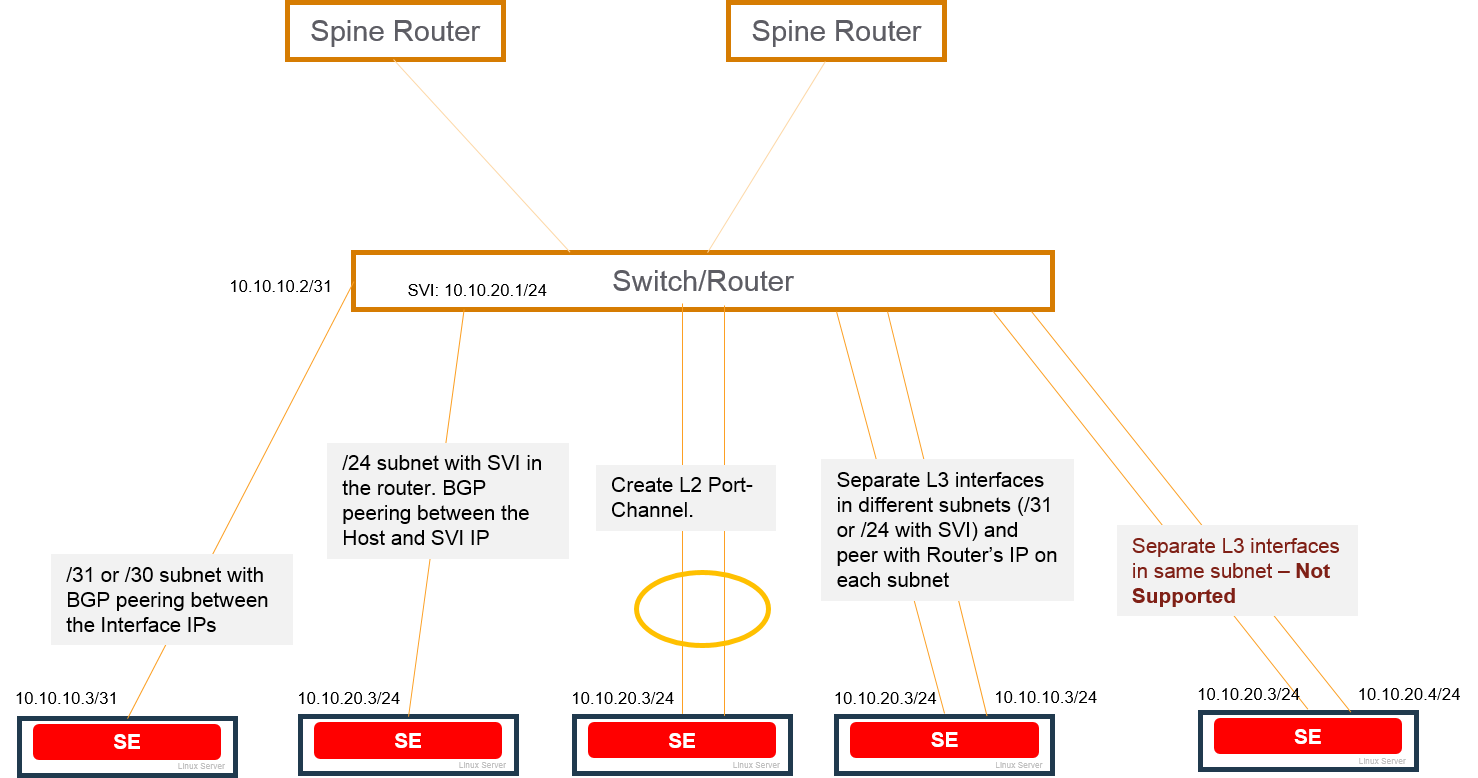

The following figure shows the types of links that are supported between NSX Advanced Load Balancer and BGP peer routers.

BGP is supported over the following types of links between the BGP peer and the SEs.

- Host route (/30 or /31 mask length) to the VIP, with the SE as the next hop.

- Network route (/24 mask length) subnet with Switched Virtual Interface (SVI) configured in the router.

- Layer 2 port channel (separate physical links configured as a single logical link on the next-hop switch or router).

- Multiple layer 3 interfaces, in separate subnets (/31 or /24 with SVI). Separate BGP peer session is set up between each SE layer 3 interface and the BGP peer.

Each SE can have multiple BGP peers. For example, an SE with interfaces in separate layer 3 subnets can have a peer session with a different BGP peer on each interface. Connection between the SE and the BGP peer on separate Layer 3 interfaces that are in the same subnet and same VLAN is not supported.

Using multiple links to the BGP peer provides higher throughput for the VIP. The virtual service also can be scaled out for higher throughput. In either case, a separate host route to the VIP is advertised over each link to the BGP peer, with the SE as the next hop address.

Note: This feature is supported for IPv6 in NSX Advanced Load Balancer.

To make debugging easier, some BGP commands can be viewed from the Controller shell. Refer to the BGP Visibility

Optional BGP Route Withdrawal when virtual service Goes Down

If virtual service advertising VIPs via BGP goes down, its VIPs are removed from BGP, and hence it becomes unreachable. The optional BGP route withdrawal when virtual service goes down feature is added.

The following are the features added:

- Field

VirtualService advertise_down_vs -

Configuration

-

To turn on the feature, you can configure as follows:

[admin:amit-ctrl-bgp]: virtualservice> advertise_down_vs [admin:amit-ctrl-bgp]: virtualservice> save -

To turn off the feature, you can configure as follows:

[admin:amit-ctrl-bgp]: virtualservice> no advertise_down_vs [admin:amit-ctrl-bgp]: virtualservice>save

-

Notes:

-

If virtual service is already down, the configuration changes done will not affect it. These changes will be applied if virtual service goes down in future. In such cases, you should disable and then enable virtual service and apply configuration.

remove_listening_port_on_vs_downfeature will not work ifadvertise_down_vsis False. -

For custom actions, such as HTTP redirect, showing error pages, etc., to handle down virtual service,

VirtualService.remove_listening_port_on_vs_downshould be False.

Use Case for adding the same BGP peer to the different VRFs

You can add a block preventing from:

-

Adding a BGP peer which belongs to a network with a different VRF than the VRF that you are adding the peer to

-

Changing network VRF if network is being used in BGP profile

The output of show show serviceengine backend_tp_segrp0-se-zcztm vnicdb:

| vnic[3] | |

| if_name | avi_eth5 |

| linux_name | eth3 |

| mac_address | 00:50:56:86:0f:c8 |

| pci_id | 0000:0b:00.0 |

| mtu | 1500 |

| dhcp_enabled | True |

| enabled | True |

| connected | True |

| network_uuid | dvportgroup-2404-cloud-d992824d-d055-4051-94f8-5abe4a323231 |

| nw[1] | |

| ip | fe80::250:56ff:fe86:fc8/64 |

| mode | DHCP |

| nw[2] | |

| ip | 10.160.4.16/24 |

| mode | DHCP |

| is_mgmt | False |

| is_complete | True |

| avi_internal_network | False |

| enabled_flag | False |

| running_flag | True |

| pushed_to_dataplane | True |

| consumed_by_dataplane | True |

| pushed_to_controller | True |

| can_se_dp_takeover | True |

| vrf_ref | T-0-default |

| vrf_id | 2 |

| ip6_autocfg_enabled | False

11:46

| vnic[7] | |

| if_name | avi_eth6 |

| linux_name | eth4 |

| mac_address | 00:50:56:86:12:0e |

| pci_id | 0000:0c:00.0 |

| mtu | 1500 |

| dhcp_enabled | True |

| enabled | True |

| connected | True |

| network_uuid | dvportgroup-69-cloud-d992824d-d055-4051-94f8-5abe4a323231 |

| nw[1] | |

| ip | 10.160.4.21/24 |

| mode | DHCP |

| nw[2] | |

| ip | 172.16.1.90/32 |

| mode | VIP |

| ref_cnt | 1 |

| nw[3] | |

| ip | fe80::250:56ff:fe86:120e/64 |

| mode | DHCP |

| is_mgmt | False |

| is_complete | True |

| avi_internal_network | False |

| enabled_flag | False |

| running_flag | True |

| pushed_to_dataplane | True |

| consumed_by_dataplane | True |

| pushed_to_controller | True |

| can_se_dp_takeover | True |

| vrf_ref | T-0-default |

| vrf_id | 2 |

| ip6_autocfg_enabled | False |

[T-0:tp_bm-ctlr1]: > show vrfcontext

+-------------+-------------------------------------------------+

| Name | UUID |

+-------------+-------------------------------------------------+

| global | vrfcontext-0287e5ea-a731-4064-a333-a27122d2683a |

| management | vrfcontext-c3be6b14-d51d-45fc-816f-73e26897ce84 |

| management | vrfcontext-1253beae-4a29-4488-80d4-65a732d42bb4 |

| global | vrfcontext-e2fb3cae-f4a6-48d5-85be-cb06293608d6 |

| T-0-default | vrfcontext-1de964c7-3b6b-4561-9005-8f537db496ea |

| T-0-VRF | vrfcontext-04bb20ef-1cbc-498b-b5ce-2abf68bae321 |

| T-1-default | vrfcontext-9bea0022-0c15-44ea-8813-cfd93f559261 |

| T-1-VRF | vrfcontext-18821ea1-e1c7-4333-a72b-598c54c584d5 |

+-------------+-------------------------------------------------+

[T-0:tp_bm-ctlr1]: > show vrfcontext T-0-default

+----------------------------+-------------------------------------------------+

| Field | Value |

+----------------------------+-------------------------------------------------+

| uuid | vrfcontext-1de964c7-3b6b-4561-9005-8f537db496ea |

| name | T-0-default |

| bgp_profile | |

| local_as | 65000 |

| ibgp | True |

| peers[1] | |

| remote_as | 65000 |

| peer_ip | 10.160.4.1 |

| subnet | 10.160.4.0/24 |

| md5_secret | |

| bfd | True |

| network_ref | PG-4 |

| advertise_vip | True |

| advertise_snat_ip | False |

| advertisement_interval | 5 |

| connect_timer | 10 |

| ebgp_multihop | 0 |

| shutdown | False |

| peers[2] | |

| remote_as | 65000 |

| peer_ip | 10.160.2.1 |

| subnet | 10.160.2.0/24 |

| md5_secret | |

| bfd | True |

| network_ref | PG-2 |

| advertise_vip | False |

| advertise_snat_ip | True |

| advertisement_interval | 5 |

| connect_timer | 10 |

| ebgp_multihop | 0 |

| shutdown | False |

| keepalive_interval | 60 |

| hold_time | 180 |

| send_community | True |

| shutdown | False |

| system_default | False |

| lldp_enable | True |

| tenant_ref | admin |

| cloud_ref | backend_vcenter |

+----------------------------+-------------------------------------------------+

Notes:

-

The tenant (tenant VRF enabled) specific SE is configured with a PG-4 interface in VRF context (T-0-default) which belongs to tenant and not the actual VRF context (global) in which the PG-4 is configured.

-

From placement perspective, if you initiate an add vNIC for an Service Engine for a virtual service, the vNIC’s VRF will always be the VRF of the virtual service. This change will block you from adding a BGP peer to a

vrfcontext, if the BGP peer belongs to a network which has a differentvrfcontext. The change is necessary as this configuration can cause traffic to be dropped. -

Since there is no particular use case for having a VRF-A with BGP peers which belong to networks in VRF-B, you will not be allowed to make any configuration changes.

-

Additionally, if you want to change an existing network’s VRF, and there are BGP peers in that network’s VRF which belong to this network, the change will be blocked.

Bidirectional Forwarding Detection (BFD)

BFD is supported for fast detection of failed links. BFD enables networking peers on each end of a link to quickly detect and recover from a link failure. Typically, BFD detects and repairs a broken link faster than by waiting for BGP to detect the down link.

For instance, if the SE fails, BFD on the BGP peer router can quickly detect and correct the link failure.

Note: Starting with NSX Advanced Load Balancer release 21.1.2, BFD feature supports BGP multi-hop implementation.

Scaling

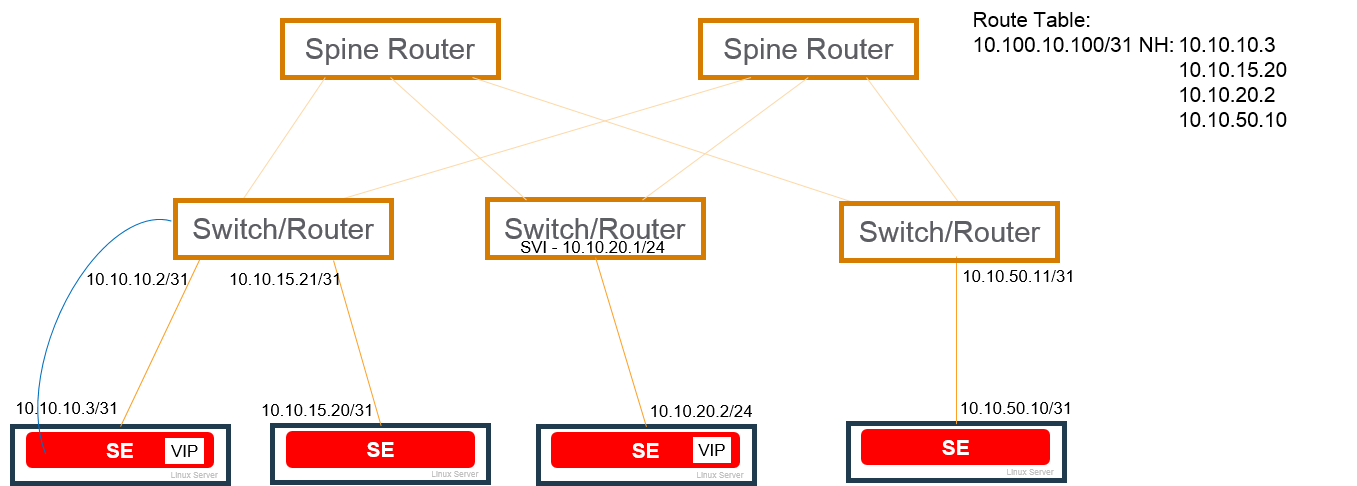

Scaling out/in of virtual services is supported. In this example, a virtual service placed on the SE on the 10.10.10.x network is scaled out to 3 additional SEs.

Flow Resiliency During Scale Out/In

A flow is a 5-tuple: src-IP, src-port, dst-IP, dst-port, and protocol. Routers do a hash of the 5-tuple to pick which equal cost path to use. When an SE scale out occurs, the router is given yet another path to use, and its hashing algorithm may make different choices, thus disrupting existing flows. To gracefully cope with this BGP-based scale-out issue, NSX Advanced Load Balancer supports resilient flow handling using IP-in-IP (IPIP) tunneling. The following sequence shows how this is done.

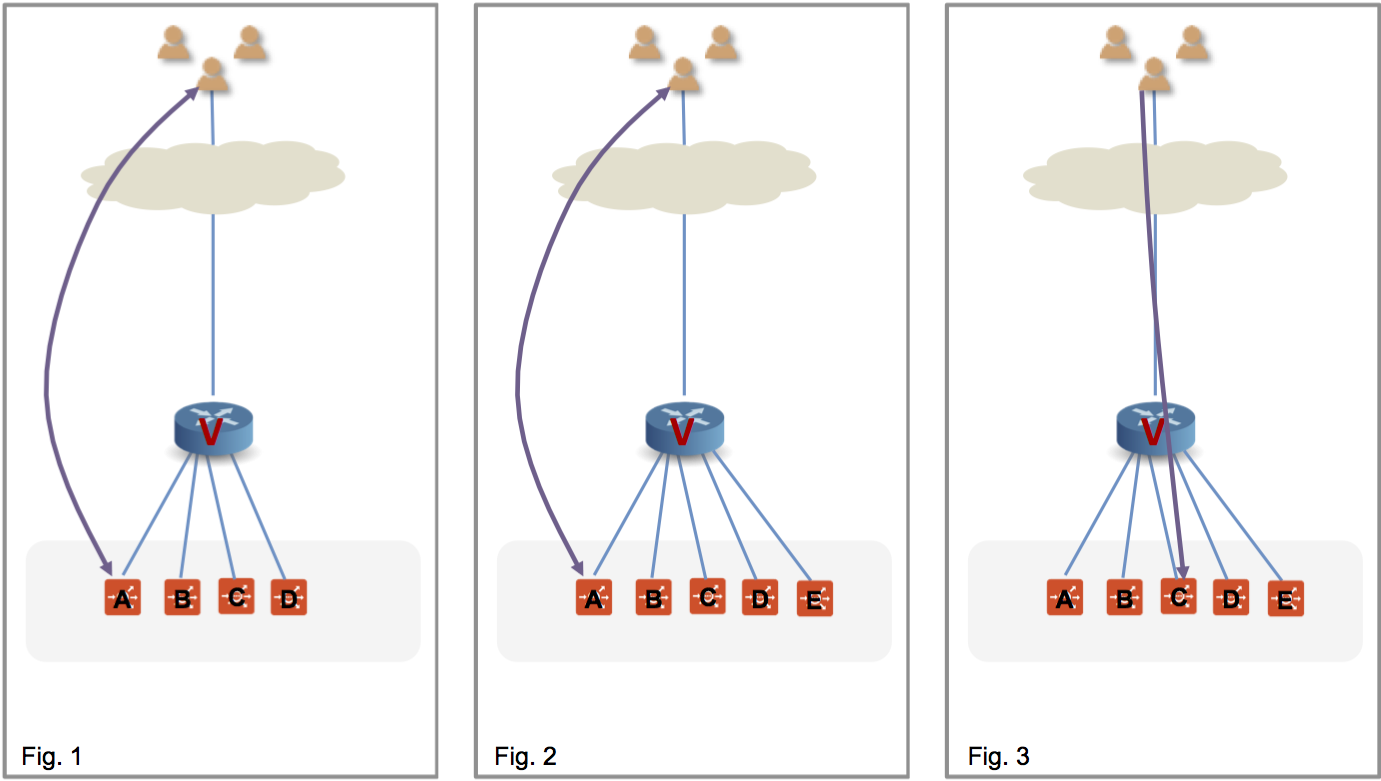

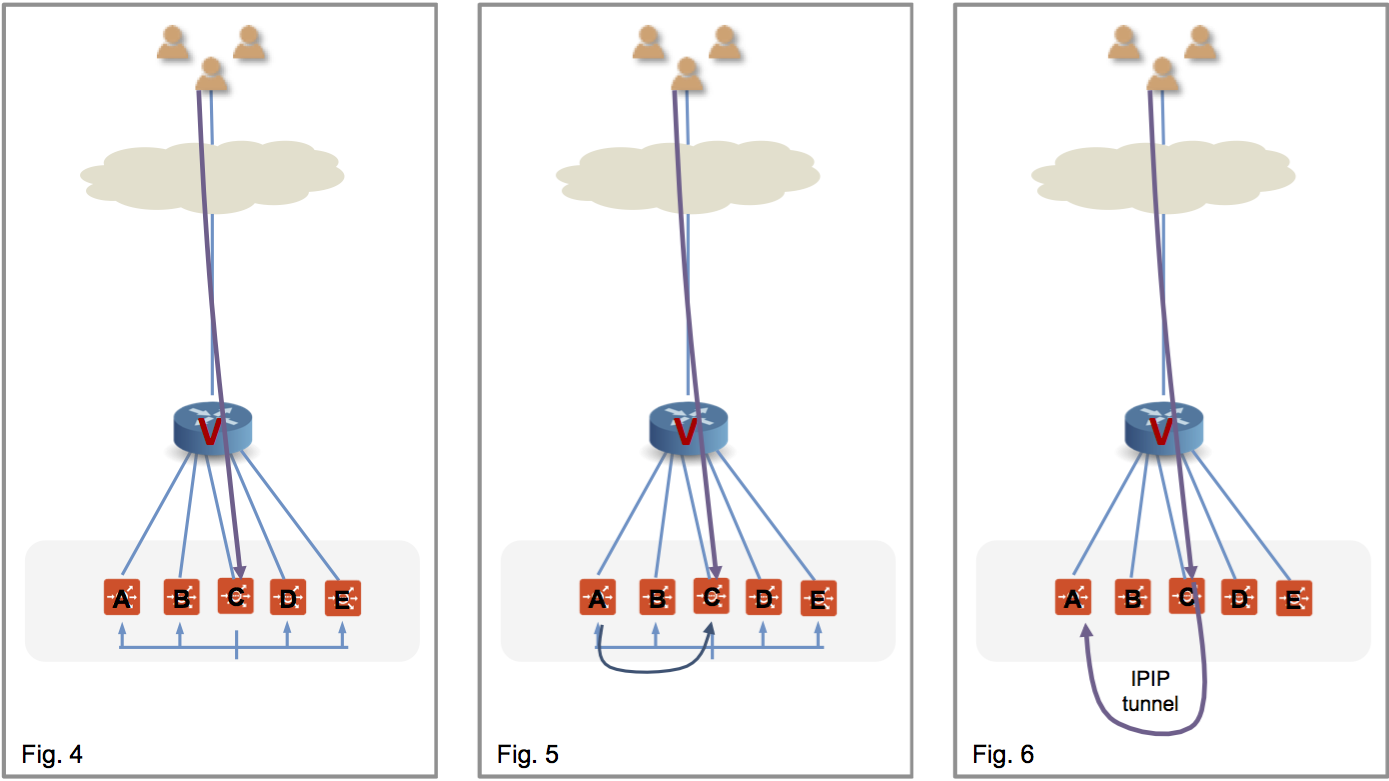

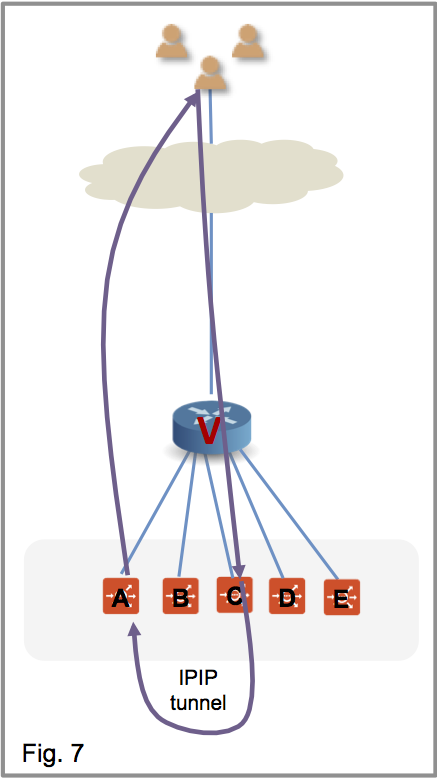

Figure 1 shows the virtual service placed on four SEs, with a flow ongoing between a client and SE-A. In figure 2, there is a scale out to SE-E. This changes the hash on the router. Existing flows get rehashed to other SEs. In this particular example, suppose it is SE-C.

In the NSX Advanced Load Balancer implementation SE-C sends a flow probe to all other SEs (figure 4). Figure 5 shows SE-A responding to claim ownership of the depicted flow. In figure 6, SE-C uses IPIP tunneling to send all packets of this flow to SE-A.

In figure 7 SE-A continues to process the flow and sends its response directly to the client.

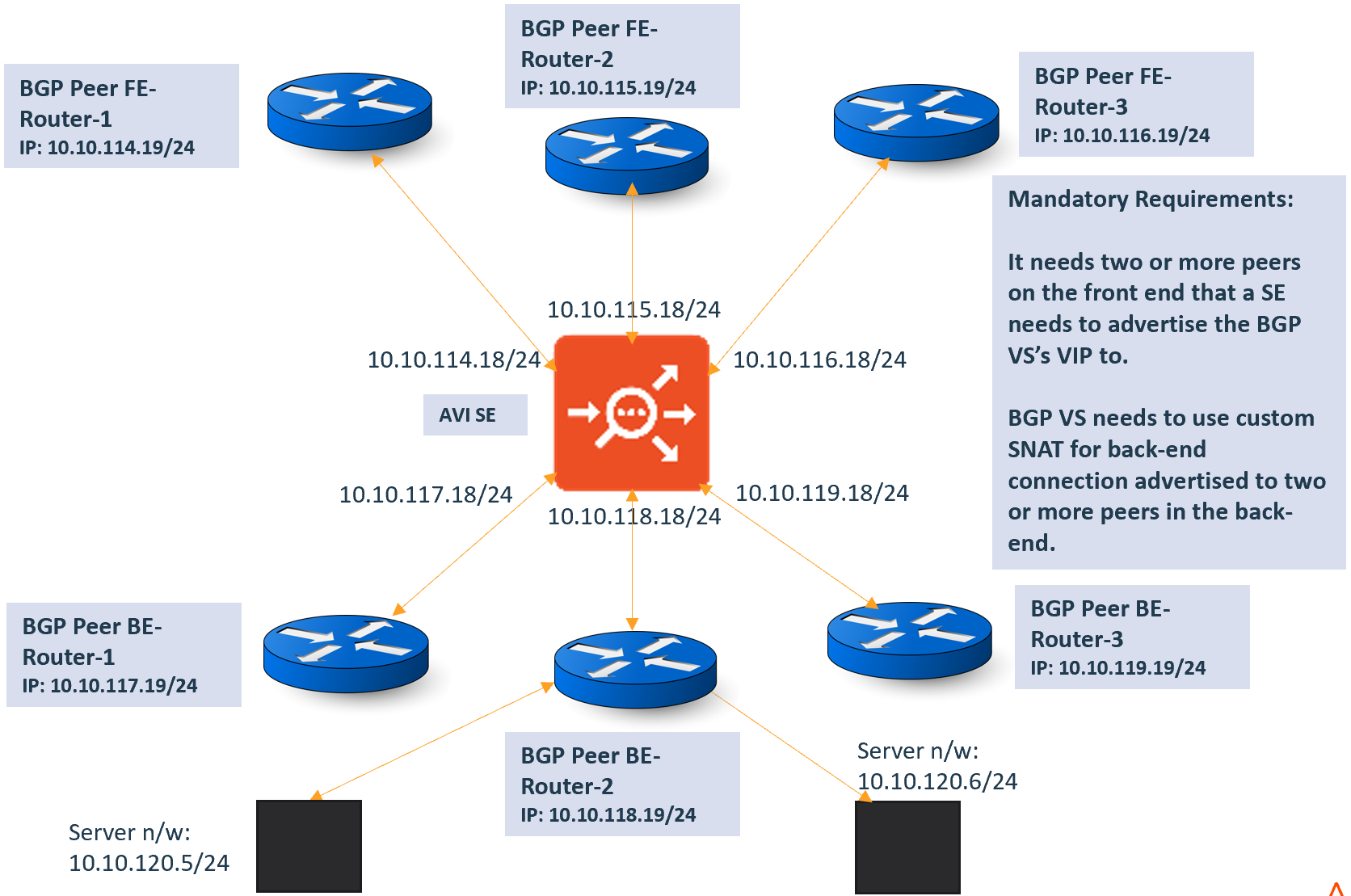

Flow Resiliency for Multi-homed BGP Virtual Service

The flow resiliency is supported when there is a BGP virtual service that is configured to advertise its VIP to more than one peer in front-end as well is configured to advertise SNAT IP associated to virtual service to more than one peers in back-end.

In such a setup, when one of the links goes down, the BGP withdraws the routes from that particular NIC causing rehashing of that flow to another interface on the same SE or to another SE. The new SE that receives the flow tries to recover the flow with a flow probe which fails because of the interface going down.

The problem is seen with both the front-end and back-end flows.

For the front-end flows to be recovered, the flows must belong to a BGP virtual service that is placed on more than one NIC on a Service Engine.

For the back-end flows to be recovered, the virtual service must be configured with SNAT IPs and must be advertised via BGP to multiple peers in the backend.

Recovering Frontend Flows

Flow recovery within the same SE

If the interface goes down, the FT entries are not deleted. If the flow lands on another interface, the flow-probe is triggered which is going to migrate the flow from the old flow table to the new interface where the flow is landed.

The interface down event is reported to the Controller and the Controller removes the VIP placement from the interface. This causes the primary virtual service entry to be reset. If the same flow now lands on a new interface, it triggers a flow-probe, flow-migration as long as the virtual service was placed initially on more than one interfaces.

Flow recovery on a scaled-out SE

If the flow lands on a new SE, the remote flow-probes are triggered. A new flag called relay will be added to the flow-probe message. This flag indicates that all the receiving interfaces need to relay the flow-probes to other flow-tables where the flow maybe there. The flag is set at the sender of the flow-probe when the virtual service is detected as BGP scaled-out virtual service.

On the receiving SE, the messages are relayed to the other flow tables resulting in a flow-migration. So subsequent flow-probe from the new SE is going to earn a response because the flow now resides on the interface that is up and running.

If there are more than one interfaces on the flow-probe receiving SE, they will all trigger a flow-migrate.

Recovering Backend Flows

The backend flows can be migrated only if the SNAT IP is used for the backend connection. When multiple BGP peers are configured on the backend, and the servers are reachable via more than one routes, SNAT IP is placed on all the interfaces. Also the flow table entries are created on all the interfaces in the backend.

This results in the flow getting recovered in case an interface fails and the flow lands on another interface with flow table entry.

Message Digest5 (MD5) Authentication

BGP supports authentication mechanism using the Message Digest 5 (MD5) algorithm. When authentication is enabled, any TCP segment belonging to BGP exchanged between the peers, is verified and accepted only if authentication is successful. For authentication to be successful, both the peers must be configured with the same password. If authentication fails, BGP peer session will not be established. BGP authentication can be very useful because it makes it difficult for any malicious user to disrupt network routing tables.

Enabling MD5 Authentication for BGP

To enable MD5 authentication, specify md5_secret in the respective BGP peer configuration. MD5 support is extended to OpenShift cloud where the Service Engine runs as docker container but peers with other routers masquerading as host.

Mesos Support

BGP is supported for north-south interfaces in Mesos deployments. The SE container that is handling the virtual service will establish a BGP peer session with the BGP router configured in the BGP peering profile for the cloud. The SE then injects a /64 route (host route) to the VIP, by advertising the /64 to the BGP peer.

The following requirements apply to the BGP peer router.

- The BGP peer must allow the SE’s IP interfaces and subnets in its BGP neighbor configuration. The SE will initiate the peer connection with the BGP router.

- For eBGP, the peer router will see the TTL value decremented for the BGP session. This could prevent the session from coming up. This issue can be prevented from occuring by setting the eBGP multi-hop time-to-live (TTL). For example, on Juniper routers, the eBGP multi-hop TTL must be set to 64.

To enable MD5 authentication, specify md5_secret in the respective BGP peer configuration. MD5 support is extended to OpenShift cloud where the Service Engine runs as docker container but peers with other routers masquerading as host.

Enabling BGP Features in NSX Advanced Load Balancer

Configuration of BGP features in NSX Advanced Load Balancer is accomplished by configuring a BGP profile, and by enabling RHI in the virtual service’s configuration.

-

Configure a BGP profile. The BGP profile specifies the local Autonomous System (AS) ID that the SE and each of the peer BGP routers is in, and the IP address of each peer BGP router.

-

Enable Advertise VIP using BGP option on the Advanced tab of the virtual service’s configuration. This option advertises a host route to the VIP address, with the SE as the next hop.

Note: When BGP is configured on global VRF on LSC in-band, BGP configuration is applied on SE only when a virtual service is configured on the SE. Till then peering between SE and peer router will not happen.

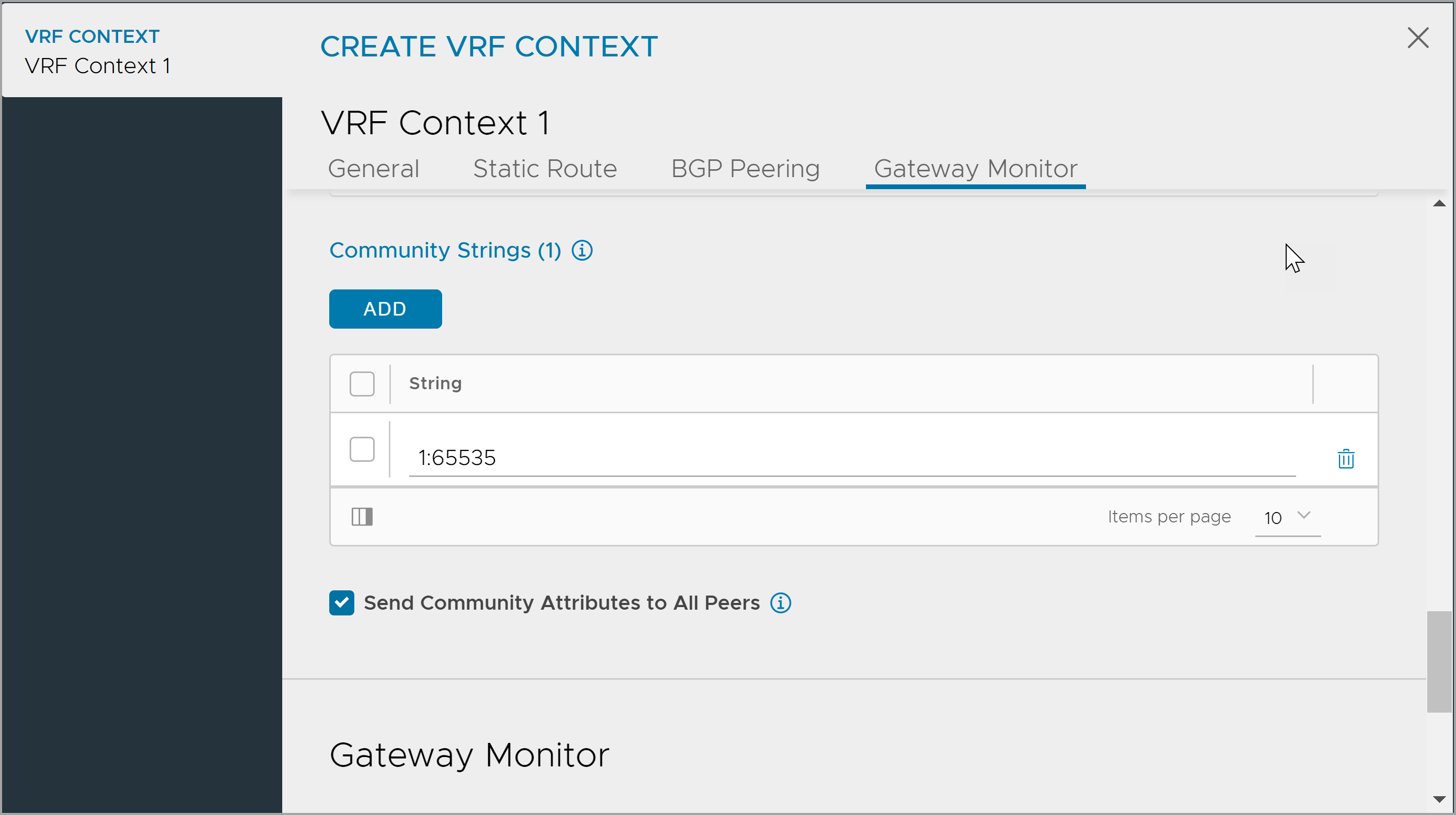

Using NSX Advanced Load Balancer UI

To configure a BGP profile via the web interface,

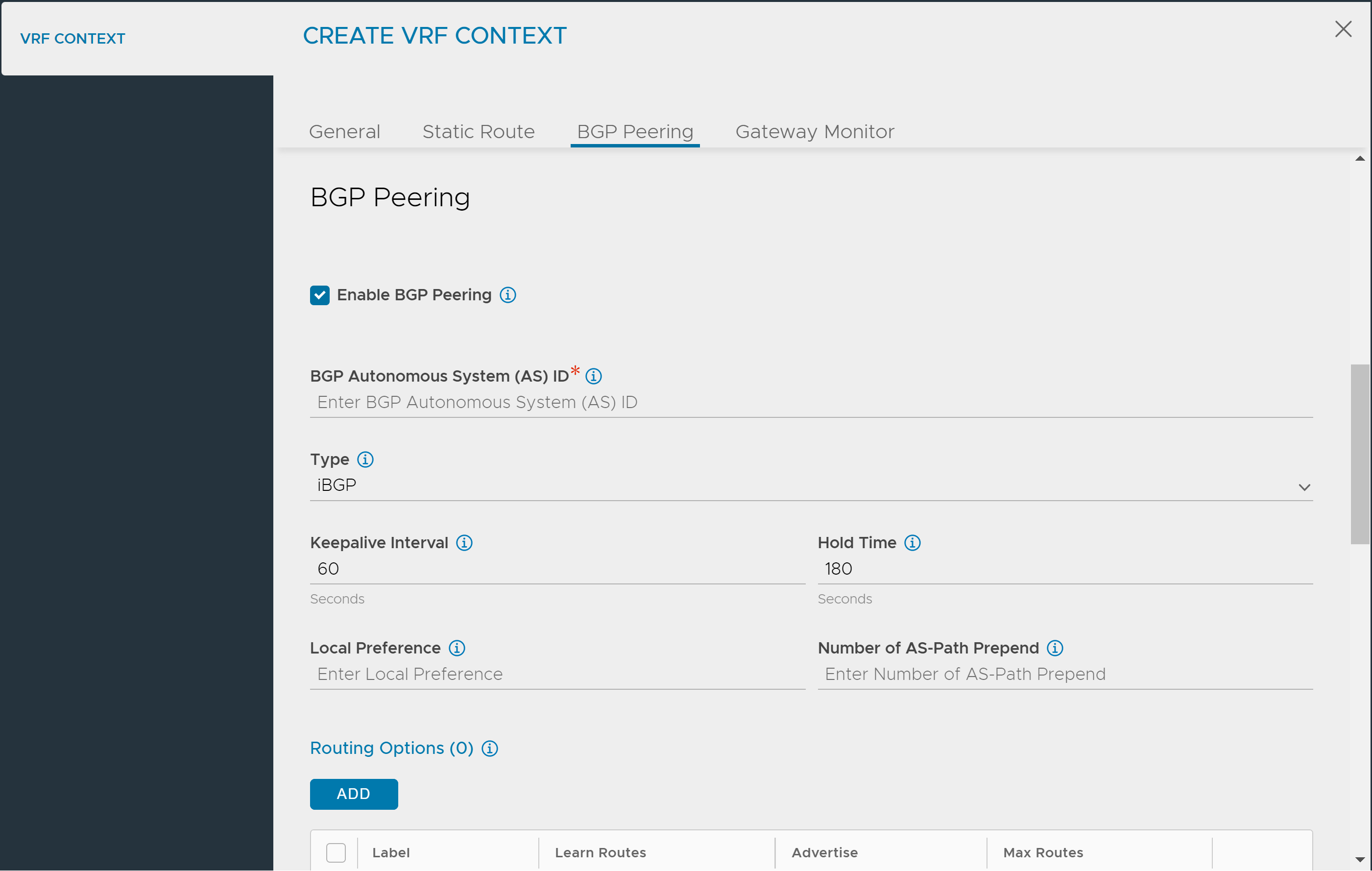

- Navigate to Infrastructure > Cloud Resources > VRF Context.

- Select the Cloud.

- Select a VRF Context.

- Click on the BGP Peering tab, and then check Enable BGP Peering box.

- Enter the following information.

- BGP Autonomous System (AS) ID: a value between 1 and 4294967295

- BGP type: iBGP or eBGP

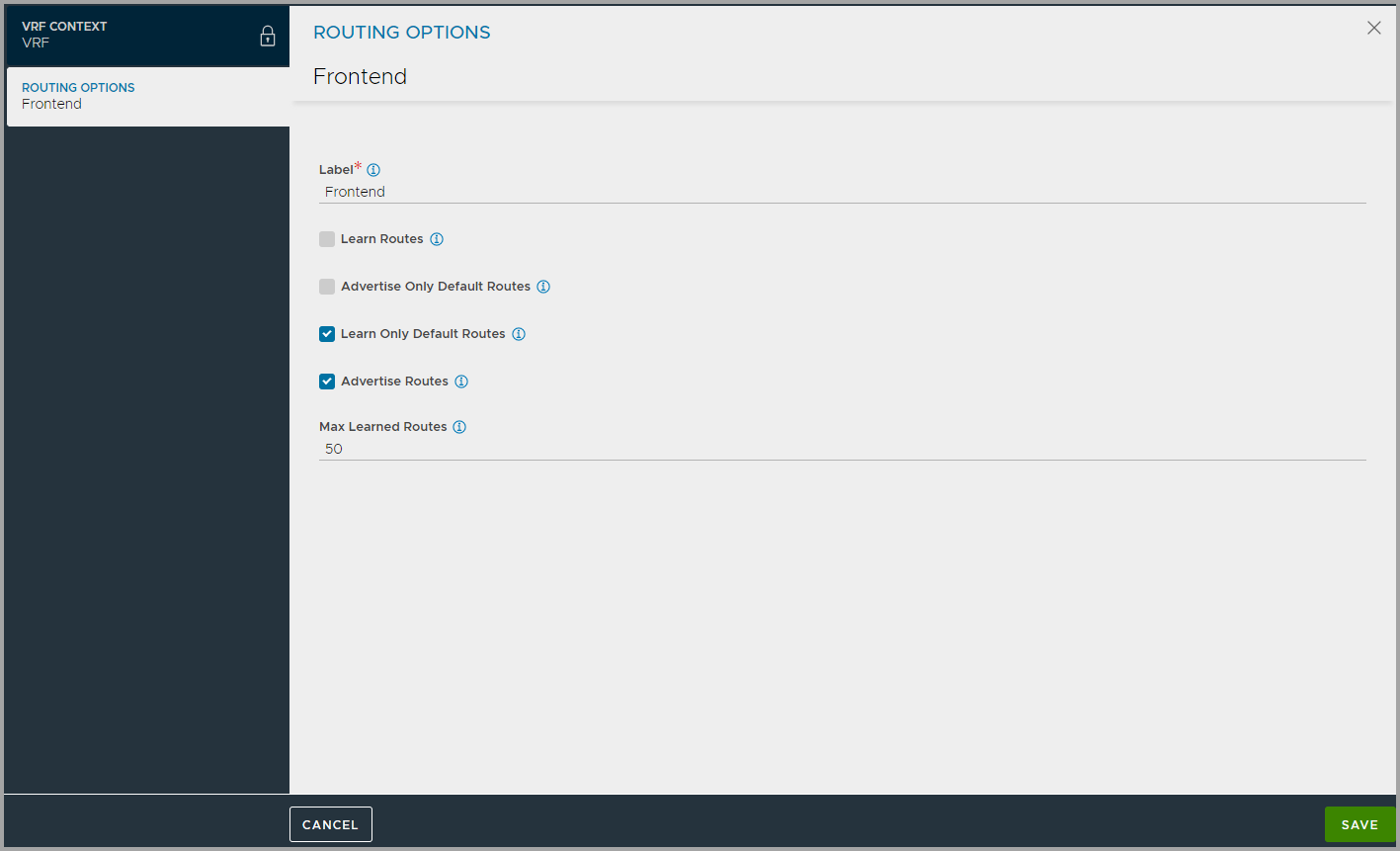

- Under Routing Options click Add to configure learning and advertising options for BGP Peers.

Enter the label and check the required route checkboxes.

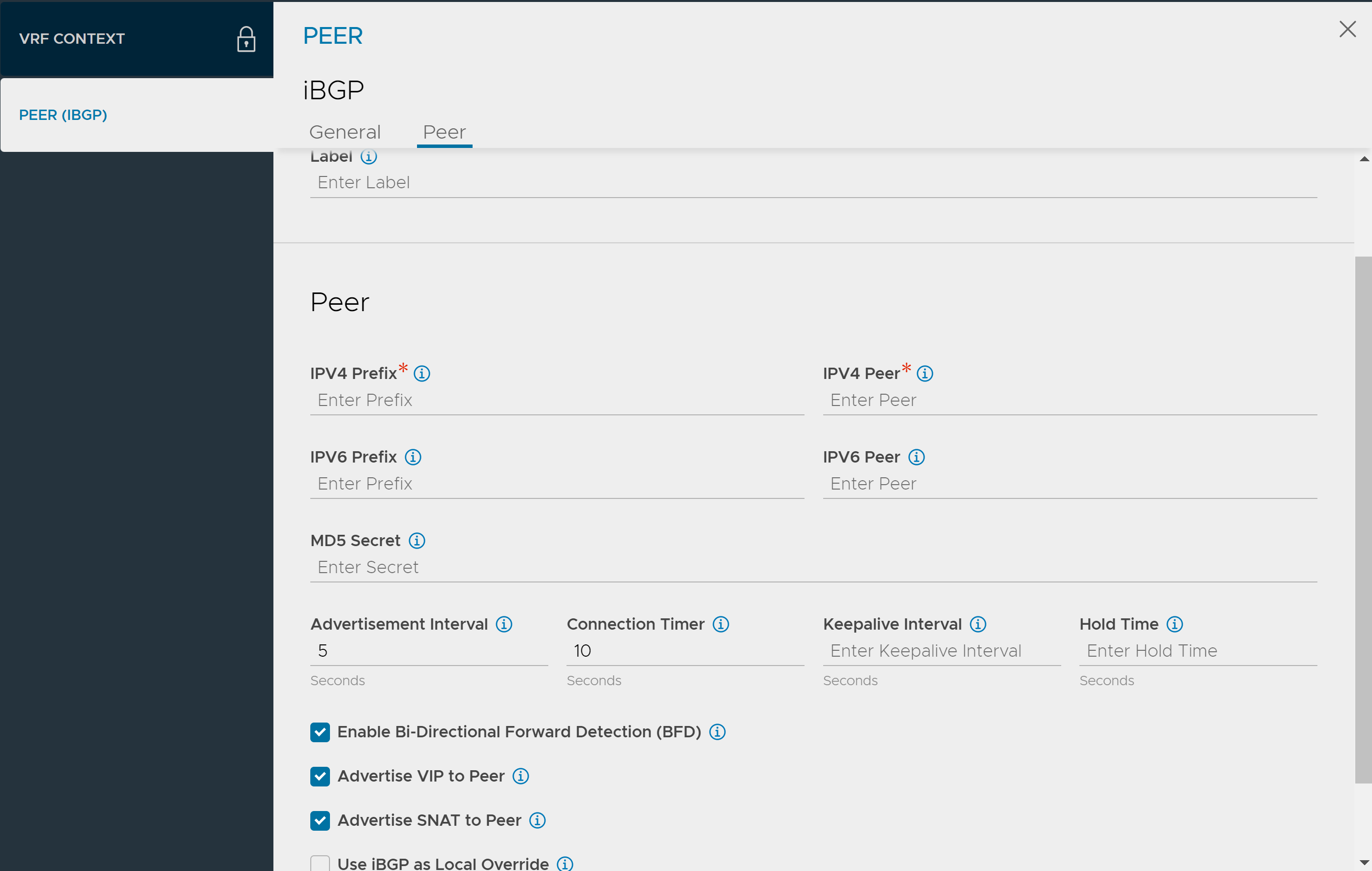

Enter the label and check the required route checkboxes. - Under Peers, click Add and configure the details as shown below:

- Enter IPV4, IPV6 prefix and peer details.

- Enter peer autonomous system Md5 digest secret key.

- Enter the intervals and timer details for peer.

- Check the relevant peer checkbox.

- Enter IPV4, IPV6 prefix and peer details.

-

Click Add under Community Strings and enter either in

aa:nnformat where aa, nn is within [1,65535] orlocal-AS|no-advertise|no-export|internetformat. - Click Save.

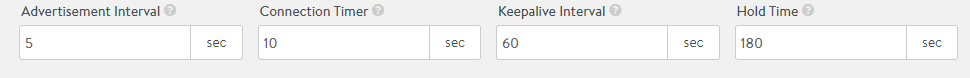

Enabling BGP Timers

BGP timers — Advertisement Interval, Connection Timer, Keepalive Interval, and Hold Time can be configured using NSX Advanced Load Balancer UI as well.

Navigate to Infrastructure > Routing, and select BGP Peering. Enter the desired values for the timers as shown below.

CLI

The following commands configure the BGP profile. The BGP profile is included under NSX Advanced Load Balancer’s virtual routing and forwarding (VRF) settings. BGP configuration is tenant-specific and the profile. Accordingly, sub-options appear in a suitable tenant vrfcontext.

: > configure vrfcontext management

Multiple objects found for this query.

[0]: vrfcontext-52d6cf4f-55fa-4f32-b774-9ed53f736902#management in tenant admin, Cloud AWS-Cloud

[1]: vrfcontext-9ff610a4-98fa-4798-8ad9-498174fef333#management in tenant admin, Cloud Default-Cloud

Select one: 1

Updating an existing object. Currently, the object is:

+----------------+-------------------------------------------------+

| Field | Value |

+----------------+-------------------------------------------------+

| uuid | vrfcontext-9ff610a4-98fa-4798-8ad9-498174fef333 |

| name | management |

| system_default | True |

| tenant_ref | admin |

| cloud_ref | Default-Cloud |

+----------------+-------------------------------------------------+

: vrfcontext > bgp_profile

: vrfcontext:bgp_profile > local_as 100

: vrfcontext:bgp_profile > ibgp

: vrfcontext:bgp_profile > peers peer_ip 10.115.0.1 subnet 10.115.0.0/16 md5_secret abcd

: vrfcontext:bgp_profile:peers > save

: vrfcontext:bgp_profile > save

: vrfcontext > save

: >This profile enables iBGP with peer BGP router 10.115.0.1/16 in local AS 100. The BGP connection is secured using MD5 with shared secret “abcd.”

The following commands enable RHI for a virtual service (vs-1):

: > configure virtualservice vs-1

: virtualservice > enable_rhi

: virtualservice > save

: >The following commands enable RHI for a source-NAT’ed floating IP address for a virtual service (vs-1):

: > configure virtualservice vs-1

: virtualservice > enable_rhi_snat

: virtualservice > save

: >The following command can be used to view the virtual service’s configuration.

: > show virtualserviceTwo configuration knobs have been added to configure per-peer “advertisement-interval” and “connect” timer in Quagga BGP:

advertisement_interval:Minimum time between advertisement runs, default = 5 seconds

connect_timer:Time due for connect timer, default = 10 seconds

Usage is illustrated in this CLI sequence:

[admin:controller]:> configure vrfcontext management

Multiple objects found for this query.

[0]: vrfcontext-52d6cf4f-55fa-4f32-b774-9ed53f736902#management in tenant admin, Cloud AWS-Cloud

[1]: vrfcontext-9ff610a4-98fa-4798-8ad9-498174fef333#management in tenant admin, Cloud Default-Cloud

Select one: 1

Updating an existing object. Currently, the object is:

+----------------+-------------------------------------------------+

| Field | Value |

+----------------+-------------------------------------------------+

| uuid | vrfcontext-9ff610a4-98fa-4798-8ad9-498174fef333 |

| name | management |

| system_default | True |

| tenant_ref | admin |

| cloud_ref | Default-Cloud |

+----------------+-------------------------------------------------+

[admin:controller]: vrfcontext> bgp_profile

[admin:controller]: vrfcontext:bgp_profile> peers

New object being created

[admin:controller]: vrfcontext:bgp_profile:peers> advertisement_interval 10

Overwriting the previously entered value for advertisement_interval

[admin:controller]: vrfcontext:bgp_profile:peers> connect_timer 20

Overwriting the previously entered value for connect_timer

[admin:controller]: vrfcontext:bgp_profile:peers> save

[admin:controller]: vrfcontext:bgp_profile> save

[admin:controller]: vrfcontext> saveConfiguration knobs have been added to configure the keepalive interval and hold timer on a global and per-peer basis:

[admin:controller]: > configure vrfcontext global

[admin: controller]: vrfcontext> bgp_profileOverwriting the previously entered value for keepalive_interval:

[admin: controller]: vrfcontext:bgp_profile> keepalive_interval 30Overwriting the previously entered value for hold_time:

[admin: controller]: vrfcontext:bgp_profile> hold_time 90

[admin: controller]: vrfcontext:bgp_profile> save

[admin:controller]: vrfcontext> save

[admin:controller]:>The above commands configure the keepalive/hold timers on global basis, but those values can be overridden for a given peer using following per-peer commands. Both the global and per peer knobs have default values of 60 seconds for the keepalive timer and 180 seconds for the hold timer.

[admin:controller]: > configure vrfcontext global

[admin: controller]: vrfcontext> bgp_profile

[admin: controller]: vrfcontext:bgp_profile> peers index 1Overwriting the previously entered value for keepalive_interval:

[admin: controller]: vrfcontext:bgp_profile:peers> keepalive_interval 10Overwriting the previously entered value for hold_time:

[admin: controller]: vrfcontext:bgp_profile:peers> hold_time 30

[admin:controller]: vrfcontext:bgp_profile:peers> save

[admin:controller]: vrfcontext:bgp_profile> save

[admin:controller]: vrfcontext> saveExample

The following is an example of router configuration when the BGP peer is FRR:

You need to find the interface information of the SE which is peering with the router.

[admin-ctlr1]: > show serviceengine 10.79.170.52 interface summary | grep ip_addr

| ip_addr | fe80:1::250:56ff:fe91:1bed |

| ip_addr | 10.64.59.48 |

| ip_addr | fe80:2::250:56ff:fe91:b2 |

| ip_addr | 10.115.10.45 | Here 10.115.10.45 matches the subnet in the peer configuration in vrfcontext->bgp_profile object.

In the FRR router, the CLI is as follows:

# vtysh

Hello, this is FRRouting (version 7.2.1).

Copyright 1996-2005 Kunihiro Ishiguro, et al.

frr1# configure t

frr1(config)# router bgp 100

frr1(config-router)# neighbor 10.115.10.45 remote-as 100

frr1(config-router)# neighbor 10.115.10.45 password abcd

frr1(config-router)# end

frr1#You need to perform this for all the SEs that will be peering.

‘show serviceengine < > route’ Filter

The following is the CLI command to use show serviceengine <SE_ip> route:

[admin:controller]: > show serviceengine 10.19.100.1 route filter

configured_routes Show routes configured using controller

dynamic_routes Show routes learned through routing protocols

host_routes Show routes learned from host

vrf_ref Only this Vrf

Note: If no VRF is provided in the filters, then the command output would show routes from global vrf which is present in the system, by default.

Enable Gratuitous ARP

You can enable gratuitous ARP for the virtual service allocated via BGP. This feature is enabled at the Service Engine group level as shown below:

[admin:controller]: > configure serviceenginegroup se_group_test

[admin:controller]: serviceenginegroup> enable_gratarp_permanent

The BFD parameters are user-configurable via the CLI. For more information, refer to the Configuring High Frequency BFD article.

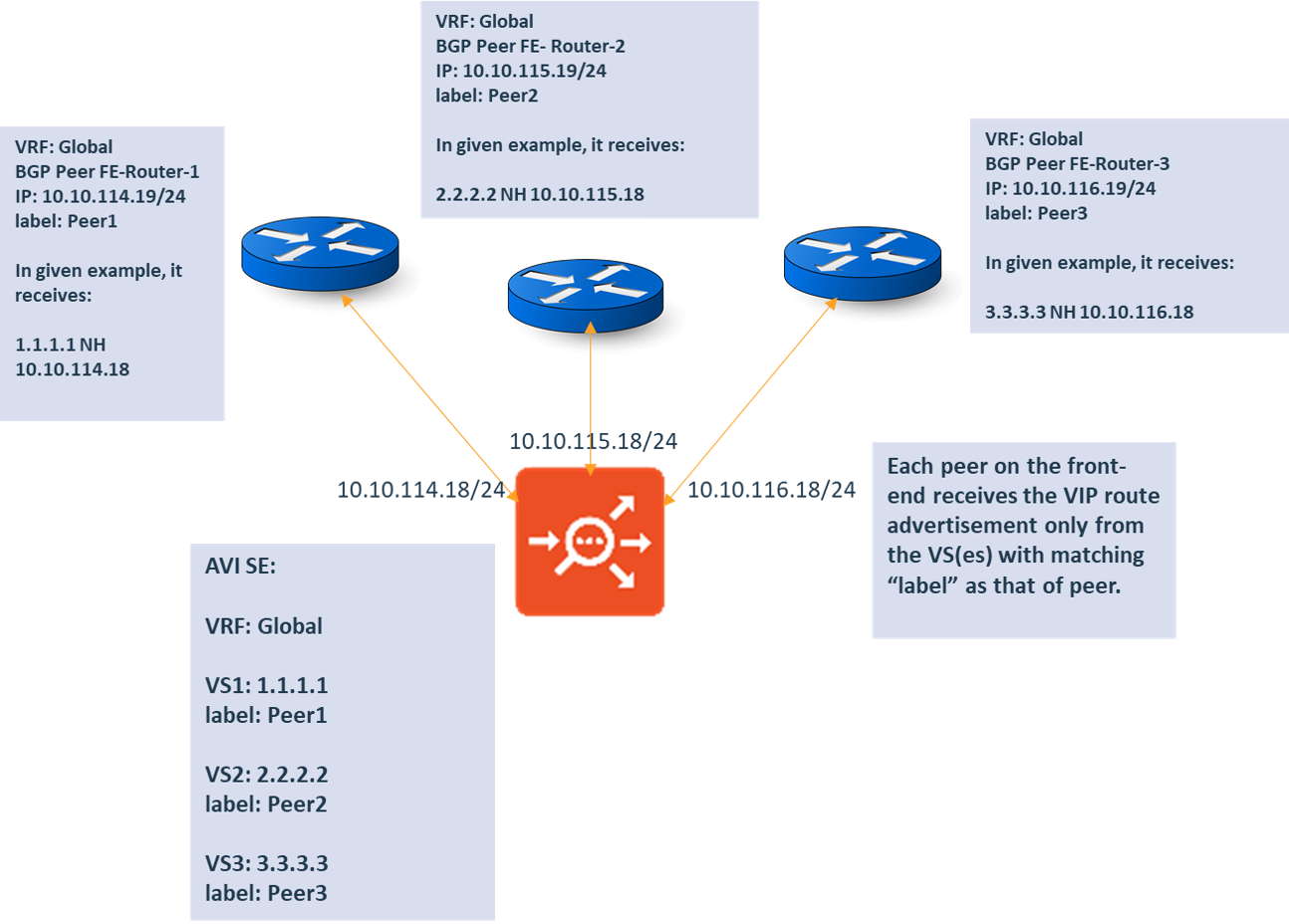

Selective VIP Advertisement

A BGP based virtual service implies that the VIPs are advertised via BGP. All the peers for which the field advertise VIP is enabled, the corresponding VIPs are advertised.

You can specify the VIPs to be advertised using labels. When configuring the VSVIP, you can define that all the peers with a specific label should have a specific VIP advertised. Each peer on the frontend receives the VIP route advertisement only from the virtual services if the label matches that of the peer.

Consider the example where,

- One SE is connected to three frontend routers, FE-Router-1, FE-Router-2, FE-Router-3.

- FE-Router-1, FE-Router-2, and FE-Router-3 have labels Peer1, Peer2, and Peer3 respectively.

- There are three virtual services in the Global VRF: VS1, VS2, and VS3.

- VS1 (1.1.1.1) is configured with label Peer1. This implies, that the virtual service will be advertised to Peer1.

- Similarly, VS2 will be advertised to Peer2 and VS 3 to Peer3, as defined by the labels.

Whenever BGP is enabled for a virtual service, the VIP will be advertised to all the frontend routers. However, in this case, the VIP will be advertised to the selected peer only.

To implement this, the labels list bgp_peer_labels is introduced in the VSVIP object configuration.

VsVip.bgp_peer_labels is a list of unique strings (with maximum of 128 strings).

The length of each string can be a maximum of 128 characters. A label can consist of upper and lower case alphabets, numbers, underscores, and hyphens.

Notes:

- If the VSVIP does not have any label, it will be advertised to all BGP peers with

advertise_vipset to True. - If the VSVIP has the

bgp_peer_labels, then the peer with the field advertise_vip set to True and the label matching thebgp_peer_labelswill receive VIP advertisement. However, if the BGP peer configuration either has no label or if the label does not match, then the peer will not receive the VIP advertisement.

Configuring BGP Peer Labels

Consider an example where VS1 is a BGP-virtual service with a VSVIP vs1-vsvsip.

Global VRF has one peer without any labels.

To enable selective VIP advertisement, add label Peer1 for the Peer, and add the Peer 1 label in VsVip.bgp_peer_labels.

Configuring VRF with BGP Peers

To configure BGP peer labels, from the NSX Advanced Load Balancer UI,

- Navigate to Infrastructure > Cloud Resources > Routing. Click Create.

- Check Enable BGP Peering box to specify BGP related details, such as,

- BGP Autonomous System ID

- BGP Peer Type

- Keepalive intervals for peers

- Hold time for peers

- Local preference to be used for routes advertised

- Number of times the local AS should be perpended

- Select the Label used for advertisement of routes to this peer

- Select the Placement Network

- Enable the option Advertise VIP to Peer

- Click Save

For more details on VRF Context, refer VRF Context in NSX Advanced Load Balancer guide.

Alternatively, BGP peer can be configured using the CLI as shown below:

configure vrfcontext global

Updating an existing object. Currently, the object is:

+----------------------------+--------------------------------------------------------------+

| Field | Value |

+----------------------------+--------------------------------------------------------------+

| uuid | vrfcontext-a1c097dd-f58e-45ca-b90a-6de72a4fd19d |

| name | global |

| bgp_profile | |

| local_as | 65000 |

| ibgp | True |

| peers[1] | |

| remote_as | 65000 |

| peer_ip | 10.10.114.19/24 |

| subnet | 10.10.114.0/24 |

| bfd | True |

| network_ref | vxw-dvs-34-virtualwire-15-sid-1060014-blr-01-vc06-avi-dev010 |

| advertise_vip | True |

| advertise_snat_ip | True |

| advertisement_interval | 5 |

| connect_timer | 10 |

| ebgp_multihop | 0 |

| shutdown | False |

| keepalive_interval | 60 |

| hold_time | 180 |

| send_community | True |

| shutdown | False |

| system_default | True |

| lldp_enable | True |

| tenant_ref | admin |

| cloud_ref | Default-Cloud |

+----------------------------+--------------------------------------------------------------+

[admin:]: vrfcontext> bgp_profile

[admin:]: vrfcontext:bgp_profile> peers index 1

[admin:]: vrfcontext:bgp_profile:peers> label Peer1

[admin:]: vrfcontext:bgp_profile:peers> save

[admin:]: vrfcontext:bgp_profile> save

[admin:]: vrfcontext> save

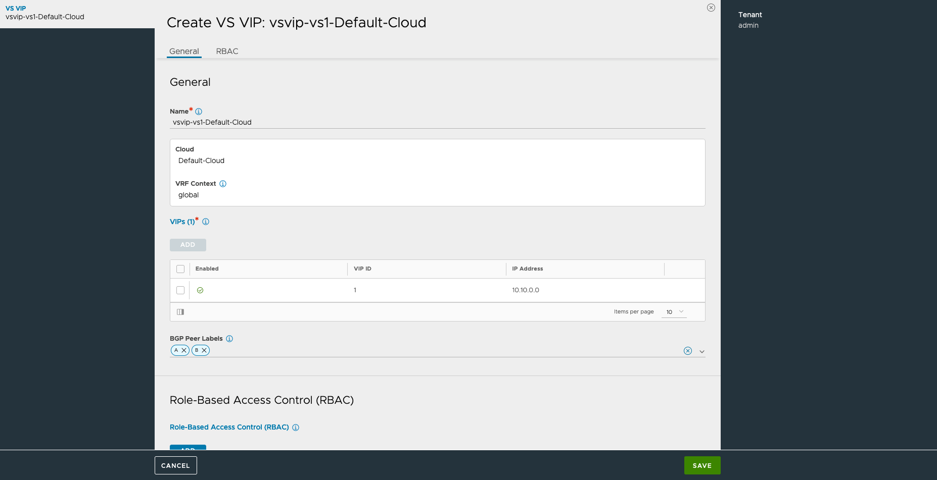

Configuring VSVIP 1

From the VS VIP creation screen of the NSX Advanced Load Balancer, add BGP peer labels, as required:

Alternatively, BGP Peer labels can be configured in the VSVIP using the CLI as shown below:

configure vsvip vs1-vsvip

Updating an existing object. Currently, the object is:

+-----------------------------+--------------------------------------------+

| Field | Value |

+-----------------------------+--------------------------------------------+

| uuid | vsvip-0cab1bbb-d474-4365-8ba4-9d6a3f0add34 |

| name | vs1-vsvip |

| vip[1] | |

| vip_id | 0 |

| ip_address | 1.1.1.1 |

| enabled | True |

| auto_allocate_ip | False |

| auto_allocate_floating_ip | False |

| avi_allocated_vip | False |

| avi_allocated_fip | False |

| auto_allocate_ip_type | V4_ONLY |

| prefix_length | 32 |

| vrf_context_ref | global |

| east_west_placement | False |

| tenant_ref | admin |

| cloud_ref | Default-Cloud |

+-----------------------------+--------------------------------------------+

[admin:]: vsvip> bgp_peer_labels Peer1

[admin:]: vsvip> save

Caveats

- This feature is only applicable to BGP-virtual services. For virtual services that does not use BGP, the field

bgp_peer_labelscannot be enabled. - When selective VIP advertisement is configured, the option

use_vip_as_snatcan not be enabled.

BGP Label-Based Virtual Service Placement

Prior to NSX Advanced Load Balancer version 21.1.4, the placement of BGP virtual services is limited to a maximum of four distinct peer networks and was not label-aware. In the event of more than four distinct peer networks, the Controller chose any four networks randomly out of the same.

Starting with NSX Advanced Load Balancer version 21.1.4, the virtual service placement is done based on the BGP peer label configuration.

Use Case 1

A virtual service VIP with a label X, for example, can only be placed on SE’s having BGP peering with peers containing label X.

| VRF | BGP Peer Labels | VSVIP BGP Peer Lables |

|---|---|---|

| Network 1 | Label 1 | Label 1 |

| Network 2 | Label 2 | Label 2 |

| Network 3 | Label 3 | Label 3 |

| Network 4 | Label 4 | Label 4 |

| Network 5 | Label 5 |

In this case, the virtual service is placed on Network 1, Network 2, Network 3, and Network 4.

Use Case 2

A virtual service VIP with no labels can be placed on SE’s having BGP peering with peers in any subnet. That is, if the BGP peer has labels but BGP virtual service VIP does not have a label, the virtual service VIP is advertised to be placed on all peer NICs (maximum of four distinct peer networks).

| VRF | BGP Peer Labels | VSVIP BGP Peer Lables |

|---|---|---|

| Network 1 | Label 1 | |

| Network 2 | Label 2 | |

| Network 3 | Label 3 | |

| Network 4 | Label 4 | |

| Network 5 | Label 5 |

In this case, the virtual service is randomly placed on any one of the four networks.

Use Case 3

If the virtual service VIP is updated to associate a label later, the SE receives the virtual service SE_List update, the VIP is withdrawn from all the other peers and is placed only on the NIC pertaining to the peer with the matching label (disruptive update).

For example, the initial configuration is as below:

| VRF | BGP Peer Labels | VSVIP BGP Peer Lables |

|---|---|---|

| Network 1 | Label 1 | Label 1 |

| Network 2 | Label 2 | Label 2 |

| Network 3 | Label 3 | Label 3 |

| Network 4 | Label 4 | |

| Network 5 | Label 5 |

Note: Updates done on the VS VIP to associate the labels will lead to disruptive update of the virtual service.

The updated configuration is as shown below:

| VRF | BGP Peer Labels | VSVIP BGP Peer Lables |

|---|---|---|

| Network 1 | Label 1 | Label 1 |

| Network 2 | Label 2 | Label 2 |

| Network 3 | Label 3 | Label 3 |

| Network 4 | Label 4 | Label 4 |

| Network 5 | Label 5 |

Use Case 4

If the label is removed from the virtual service and the virtual service VIP is left with no label, then the virtual service VIP is placed on all the peer - NICs (maximum of four distinct peer networks).

Use Case 5

If the virtual service VIP is created with labels for which there is no matching peer, VS VIP creation is blocked due to invalid configuration, whether it is at the time of creating the virtual service or if the virtual service VIP label is updated later.

| VRF | BGP Peer Labels | VSVIP BGP Peer Lables |

|---|---|---|

| Network 1 | Label 1 | Label 5 |

| Network 2 | Label 2 | |

| Network 3 | Label 3 | |

| Network 4 | Label 4 |

In this case, since there are no matching VS VIP BGP-peer labels, VS VIP creation is blocked with the error No BGP Peer exists with matching labels.