Kubernetes Networking Definition

Kubernetes, sometimes called K8s, is an open-source container orchestration platform. It is used to automate and manage the deployment, maintenance, monitoring, operation, and scheduling of application containers across a cluster of machines, either in the cloud or on-premises.

Kubernetes allows teams to manage containerized workloads across multiple environments, infrastructures, and operating systems. It is supported by all major container management platforms such as AWS EKS, Docker EE, IBM Cloud, OpenShift, and Rancher. Core Kubernetes themes to understand before discussing Kubernetes networking include:

- Primary node. The primary node manages the Kubernetes cluster’s worker nodes and controls pod deployment.

- Worker node. Worker nodes are servers that generally run Kubernetes components such as application containers and proxies in pods.

- Service. A service is an abstraction with a stable IP address and ports that functions as a proxy or internal load balancer for requests across pods.

- Pod. The pod is the basic deployment object in Kubernetes, each with its own IP address. A pod can contain one or multiple containers.

- Other Kubernetes components. Additional important Kubernetes system components include the kubelet, the API server, and etcd.

Kubernetes was originally developed by Google. Administrators use Kubernetes cluster networking to move workloads across public, private, and hybrid cloud infrastructures. Developers use Kubernetes to package software applications together with the infrastructure required to run them, and to deploy new versions of software more quickly.

Kubernetes networking enables communication between Kubernetes components and between components and other applications. Because its flat network structure eliminates the need to map ports between containers, the Kubernetes platform provides a unique way to share virtual machines between applications and run distributed systems without dynamically allocating ports.

There are several essential things to understand about Kubernetes networking:

- Container-to-container networking: containers in the same pod communicating

- Pod-to-pod networking, both same node and across nodes

- Pod-to-service networking: pods and services communicating

- DNS and internet-to-service networking: discovering IP addresses.

What is Kubernetes Networking?

Networking is critical to Kubernetes, but it is complex. Any Kubernetes networking implementation must satisfy several basic requirements:

- All pods must be able to communicate with all other pods without network address translation (NAT).

- All nodes and pods can communicate without NAT.

- The IP address that a pod sees for itself is the same IP address that other pods and nodes see for it.

These requirements leave a few distinct Kubernetes networking problems, and divide communication into several Kubernetes network types.

Container-to-container networking: communication between containers in the same pod

How do two containers running in the same pod talk to each other? This happens the same way multiple servers run on one device: via localhost and port numbers. This is possible because containers in the same pod share networking resources, IP address, and port space; in other words, they are in the same network namespace.

This network namespace, a collection of routing tables and network interfaces, contains connections between network equipment and routing instructions for network packets. By default, Linux assigns every running process to the root network namespace, which gives it access to external IP addresses.

Network namespaces let many isolated network stacks run on the same virtual machine (VM) without interference or collisions.
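
As a rough illustration of containers in one pod talking over localhost, the sketch below uses two Python threads as stand-ins for two containers that share a network namespace. The function names and the echo protocol are hypothetical, and ordinary threads on one machine only approximate the shared namespace of a real pod.

```python
import socket
import threading


def run_echo_server(ready: threading.Event, port_holder: list) -> None:
    """Stand-in for container A: listens on localhost and echoes one message."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as srv:
        srv.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        srv.listen(1)
        port_holder.append(srv.getsockname()[1])
        ready.set()  # tell the caller that the port is known
        conn, _ = srv.accept()
        with conn:
            conn.sendall(b"echo:" + conn.recv(1024))


def call_neighbor(message: bytes) -> bytes:
    """Stand-in for container B: reaches container A via localhost and a port."""
    ready = threading.Event()
    port_holder: list = []
    server = threading.Thread(target=run_echo_server, args=(ready, port_holder))
    server.start()
    ready.wait(timeout=5)
    with socket.create_connection(("127.0.0.1", port_holder[0]), timeout=5) as client:
        client.sendall(message)
        reply = client.recv(1024)
    server.join(timeout=5)
    return reply
```

Calling `call_neighbor(b"ping")` sends the message to the neighbor over 127.0.0.1. Because both sides share one port space, two containers in the same pod cannot listen on the same port at once.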

Pod-to-pod communication

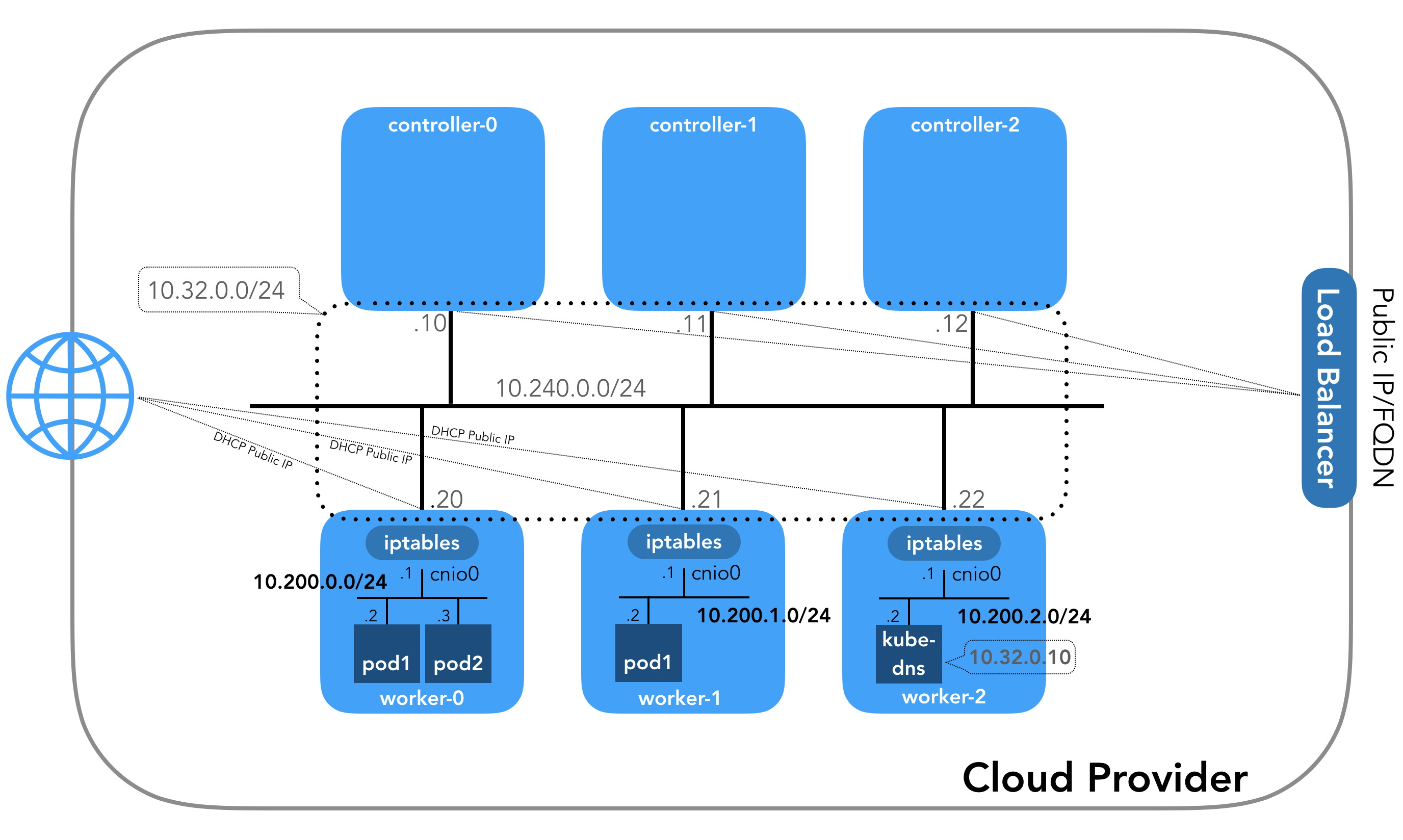

Pod-to-pod networking can occur for pods within the same node or across nodes. Each node has a classless inter-domain routing (CIDR) block, a tool used for routing traffic and allocating IP addresses. The CIDR block contains a defined set of unique IP addresses that are assigned to the node’s pods. This is intended to ensure that, regardless of which node it is in, each pod has a unique IP address.
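
To make the per-node CIDR idea concrete, here is a minimal sketch using Python's standard `ipaddress` module. The cluster range `10.244.0.0/16`, the `/24` block size per node, and the node names are assumptions chosen for illustration, not values taken from any particular cluster.

```python
import ipaddress
from itertools import islice


def allocate_node_blocks(cluster_cidr, node_names, node_prefix=24):
    """Carve the cluster range into one non-overlapping block per node."""
    cluster = ipaddress.ip_network(cluster_cidr)
    subnets = cluster.subnets(new_prefix=node_prefix)
    return dict(zip(node_names, subnets))


def first_pod_ips(block, count):
    """Return the first `count` usable addresses in a node's block."""
    return [str(ip) for ip in islice(block.hosts(), count)]


# Hypothetical cluster range and node names.
blocks = allocate_node_blocks("10.244.0.0/16", ["node-a", "node-b"])
pods_on_b = first_pod_ips(blocks["node-b"], 2)
```

Because the blocks never overlap, an address handed out on one node cannot collide with an address handed out on another, which is what keeps pod IPs unique across the cluster.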

Pods connect to communicate using a virtual ethernet device (VED) or virtual ethernet device pair (veth pair). Always created in interconnected pairs, these coupled Kubernetes container network interfaces span the root and pod namespaces, bridging the gap and acting as an intermediary connection between devices.

Requests travel between pods on the same node through a network bridge, which connects the two networks. When it receives a request, the bridge asks all connected pods whether any of them has the correct IP address to handle it. If one of the pods does, the bridge stores that information and forwards the data to it, completing the request.

Every pod on a Kubernetes node is part of the bridge, which is commonly named cbr0. The bridge connects all of the node’s pods.
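
The bridge behavior described above can be approximated with a toy model. This is a simplified sketch, not how a Linux bridge is implemented: the `Bridge` class, the port names, and the IP addresses are all hypothetical, and a real bridge works with MAC addresses and ARP rather than a Python dictionary.

```python
class Bridge:
    """Toy model of a node-local bridge connecting pod-side interfaces."""

    def __init__(self):
        self.ports = {}    # port name -> IP address of the attached pod
        self.learned = {}  # IP address -> port name, filled in as traffic flows

    def attach(self, port, pod_ip):
        self.ports[port] = pod_ip

    def forward(self, dst_ip):
        """Return the port that should receive traffic for dst_ip, or None."""
        if dst_ip in self.learned:
            return self.learned[dst_ip]  # already known, no need to ask again
        # Ask every attached pod whether it owns the destination address.
        for port, pod_ip in self.ports.items():
            if pod_ip == dst_ip:
                self.learned[dst_ip] = port  # remember the answer
                return port
        return None  # no local pod owns this address


bridge = Bridge()
bridge.attach("veth0", "10.244.1.2")
bridge.attach("veth1", "10.244.1.3")
```

A `None` result corresponds to the case where the destination is not on this node, so the traffic has to leave the bridge and be routed toward another node.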

Pod-to-service networking: communication between pods and services

Pod IP addresses are fungible by design so they can be replaced as needed dynamically. These IP addresses disappear or appear in response to application crashes, scaling up or down, or node reboots.

Therefore, unless a team creates a stateful application or takes other special precautions, pod IP addresses are not durable. Because any number of events can cause unexpected changes to the pod IP address, Kubernetes uses built-in services to deal with this and ensure seamless communication between pods.

Kubernetes services track pod state over time, so they always know the current pod IP addresses. By assigning a cluster IP address (a single virtual IP address) to a group of pod IPs, a service abstracts the individual pod IP addresses. Clients send traffic to the virtual IP address, and the service distributes it across the associated group of pods.

The virtual IP enables Kubernetes services to load balance in-cluster and distribute traffic smoothly among pods and enables pods to be destroyed and created on demand without impacting communications overall.

This pod-to-service process happens via a small process running inside each Kubernetes node: kube-proxy. The kube-proxy process maps the virtual service IP addresses to the actual pod IP addresses so requests may proceed.
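
The mapping from a virtual service IP to pod IPs can be sketched as a small lookup table. This is an illustration only: the `ServiceProxy` class and the addresses are hypothetical, round-robin selection is an assumption made for simplicity, and the real kube-proxy typically programs kernel rules (for example iptables or IPVS) instead of choosing a pod in application code.

```python
import itertools


class ServiceProxy:
    """Toy mapping from a service's cluster IP to its current pod IPs."""

    def __init__(self):
        self._endpoints = {}  # cluster IP -> list of pod IPs
        self._cursors = {}    # cluster IP -> round-robin iterator

    def set_endpoints(self, cluster_ip, pod_ips):
        """Replace the pod list, e.g. after pods are created or destroyed."""
        self._endpoints[cluster_ip] = list(pod_ips)
        self._cursors[cluster_ip] = itertools.cycle(self._endpoints[cluster_ip])

    def pick_pod(self, cluster_ip):
        """Choose the pod IP that receives the next request."""
        if not self._endpoints.get(cluster_ip):
            raise LookupError(f"no endpoints for {cluster_ip}")
        return next(self._cursors[cluster_ip])


proxy = ServiceProxy()
proxy.set_endpoints("10.96.0.10", ["10.244.1.2", "10.244.2.5"])
picks = [proxy.pick_pod("10.96.0.10") for _ in range(3)]
```

Clients only ever address `10.96.0.10`. When a pod is replaced, calling `set_endpoints` again updates the table without the clients noticing.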

DNS and internet-to-service networking: discovering IP addresses

DNS is the system Kubernetes uses to convert domain names to IP addresses, and each Kubernetes cluster has a DNS resolution service. Every service in the cluster has an assigned domain name, and pods automatically receive a DNS name as well. Pods can also specify their own DNS name.

When a request is made via the service domain name, the DNS service resolves it to the service’s IP address. Next, kube-proxy converts the service’s IP address into a pod IP address. Then the request follows a given path, depending on whether the destination pod is on the same node or on a different node.
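
The three steps in the previous paragraph can be traced with plain dictionaries. This is a sketch under stated assumptions: the service name, IP addresses, and node names are hypothetical, the `cluster.local` suffix is assumed as the cluster domain, and picking the first endpoint stands in for whatever selection kube-proxy performs.

```python
def service_fqdn(service, namespace="default", cluster_domain="cluster.local"):
    """Build the conventional DNS name of a service (cluster domain assumed)."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def trace_request(name, source_node, dns_records, endpoints, pod_nodes):
    """Follow a request from service name to destination pod."""
    cluster_ip = dns_records[name]     # step 1: DNS resolves name -> service IP
    pod_ip = endpoints[cluster_ip][0]  # step 2: service IP -> a pod IP (first one here)
    # Step 3: the path depends on where the destination pod runs.
    path = "same-node" if pod_nodes[pod_ip] == source_node else "cross-node"
    return cluster_ip, pod_ip, path


# Hypothetical cluster state.
name = service_fqdn("orders", namespace="shop")
dns_records = {name: "10.96.0.10"}
endpoints = {"10.96.0.10": ["10.244.2.5"]}
pod_nodes = {"10.244.2.5": "node-b"}
```

With this state, a request issued from `node-a` takes the cross-node path, while the same request issued from `node-b` stays on the local bridge.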

Finally, most deployments require internet connectivity, enabling collaboration between distributed teams and services. To enable external access in Kubernetes and control traffic into and out of your network, you must set up egress and ingress policies with either allowlisting or denylisting.

Egress routes traffic from the node to an outside connection. Typically attached to a virtual private cloud (VPC), this is often achieved using an internet gateway that maps IPs between the host machine and the users with network address translation (NAT). However, because the gateway cannot map to the individual pods on the node, Kubernetes uses cluster IPs and iptables to finalize communications.
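
For readers unfamiliar with NAT, the address mapping on the egress path can be pictured as a translation table. The sketch below is a generic source-NAT illustration, not the behavior of any specific gateway or of iptables itself. The `SourceNat` class, the public address, and the starting port number are hypothetical.

```python
class SourceNat:
    """Toy source NAT: rewrites an internal (IP, port) to a shared public IP."""

    def __init__(self, public_ip, first_port=40000):
        self.public_ip = public_ip
        self._next_port = first_port
        self._outbound = {}  # (internal IP, internal port) -> (public IP, public port)
        self._inbound = {}   # public port -> (internal IP, internal port)

    def translate_outbound(self, src_ip, src_port):
        """Map an internal source to the public address, reusing known mappings."""
        key = (src_ip, src_port)
        if key not in self._outbound:
            self._outbound[key] = (self.public_ip, self._next_port)
            self._inbound[self._next_port] = key
            self._next_port += 1
        return self._outbound[key]

    def translate_reply(self, public_port):
        """Find which internal source a reply belongs to, or None if unknown."""
        return self._inbound.get(public_port)


nat = SourceNat("203.0.113.10")
first = nat.translate_outbound("10.244.1.2", 51000)
second = nat.translate_outbound("10.244.1.3", 51000)
```

Two pods using the same source port get distinct public ports, so replies can be routed back to the correct sender.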

Kubernetes networking ingress—getting traffic into the cluster—is a tricky challenge that involves communications to Kubernetes services from external clients. Ingress allows and blocks particular communications with services, operating as a defined set of rules for connections. Typically, there are two ingress solutions that function on different network stack regions: the service load balancer and the ingress controller.

You can specify a load balancer to go with a Kubernetes service you create. Implement the Kubernetes network load balancer using a compatible cloud controller for your service. After you create your service, the IP address for the load balancer will be what faces the public and other users, and you can direct traffic to that load balancer to begin communicating with your service.

Packets that flow through an ingress controller have a very similar trajectory. However, the initial connection between node and ingress controller is via the exposed port on the node for each service. Also, the ingress controller can route traffic to services based on their path.
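
Path-based routing in an ingress controller can be sketched as a longest-prefix match. This is a simplified model with assumed semantics: the `route` function, the rule list, and the service names are hypothetical, and real ingress controllers also match on host names and support other path types.

```python
def route(path, rules):
    """Return the service for the longest matching path prefix, or None.

    `rules` is a list of (prefix, service_name) pairs. A prefix matches when
    the path equals it or continues below it on a "/" boundary.
    """
    matches = [
        (prefix, service)
        for prefix, service in rules
        if path == prefix or path.startswith(prefix.rstrip("/") + "/")
    ]
    if not matches:
        return None
    # Prefer the most specific (longest) prefix.
    return max(matches, key=lambda match: len(match[0]))[1]


# Hypothetical rule set.
rules = [("/", "web"), ("/api", "api"), ("/api/admin", "admin")]
```

Matching on a "/" boundary means `/apiv2` is not treated as part of `/api`, so it falls through to the catch-all `web` service.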

Kubernetes Network Policy

Kubernetes network policies enable the control of traffic flow for particular cluster applications at the IP address and port levels (OSI layers 3 and 4). An application-centric construct, Kubernetes network policies allow users to specify how pods and various network entities such as services and endpoints are permitted to communicate over the network.

A combination of the following three identifiers determines how entities and pods can communicate:

- A list of other allowed pods, although a pod cannot block access to itself;

- A list of allowed namespaces; or

- Via IP blocks that work like exceptions: traffic to and from the pod’s node is always allowed, regardless of the pod or node’s IP address.

Network policies defined by pods or namespaces are based on a selector that specifies what traffic is allowed to and from the pod(s) in question. Any traffic that matches the selector is permitted.

CIDR ranges define network policies based on IP blocks.

Implement network policies using network plugins designed to support the policies.

Pods are non-isolated by default, meaning without having a Kubernetes network policy applied to them, they accept traffic from any source. Once a network policy in a namespace selects a particular pod, that pod becomes isolated and will reject any connections that the policy does not allow even as other unselected pods in the namespace continue to accept all traffic.

Kubernetes network policies are additive. If any policies select and restrict a pod, it is subject to those combined ingress/egress rules of all policies that apply to it. The policy result is unaffected by order of evaluation.
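
The isolation and additivity rules can be expressed in a few lines. The sketch below is a deliberately reduced model: it covers only ingress and label selectors, ignoring namespaces, ports, IP blocks, and egress. The `Policy` class and the labels are hypothetical and do not mirror the actual NetworkPolicy resource schema.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    pod_selector: dict                              # labels a pod must carry to be selected
    allow_from: list = field(default_factory=list)  # label selectors of allowed sources


def matches(selector, labels):
    """A selector matches when every key/value pair is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def ingress_allowed(dst_labels, src_labels, policies):
    """Decide whether traffic from a source pod to a destination pod is allowed."""
    selecting = [p for p in policies if matches(p.pod_selector, dst_labels)]
    if not selecting:
        return True  # no policy selects the pod, so it is non-isolated
    # Additive: traffic is allowed if any selecting policy allows it.
    return any(
        matches(source, src_labels)
        for policy in selecting
        for source in policy.allow_from
    )


policies = [
    Policy({"app": "db"}, allow_from=[{"app": "api"}]),
    Policy({"app": "db"}, allow_from=[{"role": "backup"}]),
]
```

Because the result is a union of what each selecting policy allows, the order of the `policies` list has no effect on the outcome.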

For a complete definition of the resource, see the NetworkPolicy reference.

Common Kubernetes Pod Network Implementations

There are many ways to implement a Kubernetes network model and many third-party tools to choose from. These are a few popular Kubernetes network providers:

Flannel, an open-source Kubernetes network fabric, uses etcd to store configuration data, allocates subnet leases to hosts, and operates through a binary agent on each host.

Project Calico or just Calico, an open-source policy engine and networking provider, enables a scalable networking solution with enforced Kubernetes security policies on your service mesh layers or host networking.

Canal is a term based on a defunct project for an integration of the Flannel networking layer and the Calico networking Kubernetes policy capabilities. Canal offers the simple Flannel overlay and the powerful security of Calico for an easier way to enhance security on the network layer.

Weave Net, an open-source networking toolkit from Weaveworks based on a decentralized architecture, includes virtual networking features for scalability, resilience, multicast networking, security, and service discovery. It also doesn’t require any external storage or configuration services.

Kubernetes Networking Challenges

As organizations bring Kubernetes into production, security, infrastructure integration, and other major bottlenecks to deployment remain. Here are some of the most common Kubernetes container networking challenges.

Differences from traditional networking. Since container migration is more dynamic by nature, so is Kubernetes network addressing. Classic DHCP can become a hindrance to speed, and traditional ports and hard-coded addresses can render securing the network a problem. Furthermore, particularly for large-scale deployments, there are likely to be too many addresses to assign and manage, and IPv4 limitations for hosts inside one subnet.

Security. Network security is always a challenge, and it is essential to specifically define Kubernetes network policies to minimize risk. Managed solutions, cloud-native services, and platforms help render Kubernetes deployment networks more secure and ensure flawed applications in Kubernetes managed containers are not overlooked. For example, there is no default Kubernetes network encryption for traffic, so the right service mesh can help achieve a Kubernetes encrypted network.

Dynamic network policies. The need to define and change Kubernetes network policies for a pod or container requires the creation of NetworkPolicy resources. This in essence demands creating configuration files for many pods and containers, and then modifying them in a process that can be tedious, difficult, and prone to error. Kubernetes networking solutions such as Calico can allow you to abstract vendor specific network APIs and implementations and change the policy more easily based on application requirements.

Abstraction. Kubernetes adoption can involve data center design or redesign, restructuring, and implementation. Existing data centers must be modified to suit container-based Kubernetes network architectures. Use of a cloud-native, software-defined environment suitable for both non-container and container-based applications is one solution to this issue, allowing you to define container traffic management, security policies, load balancing, and other network challenges through REST APIs. Such an environment also provides an automation layer for DevOps integration, rendering Kubernetes-based applications more portable across cloud providers and data centers.

Complexity. Particularly at scale, complexity remains a challenge for Kubernetes and any container-based networking. Kubernetes service mesh solutions for cloud native and container-based applications don’t require code changes and offer features such as routing, service discovery, visibility to applications, and failure handling.

Reliability of communication. As the number of pods, containers, and services increases, so does the importance—and challenge—of service-to-service communication and reliability. Harden Kubernetes network policies and other configurations to ensure reliable communications, and manage and monitor them on an ongoing basis.

Connectivity and debugging. As with any other network technology, Kubernetes network performance can vary, and Kubernetes networks can only succeed with the right tools for monitoring and debugging. Kubernetes network latency is its own challenge and network stalls may require debugging.

Does VMware NSX Advanced Load Balancer Offer Kubernetes Networking Monitoring and Application Services?

Yes. VMware NSX Advanced Load Balancer provides a centrally orchestrated, elastic proxy services fabric with dynamic load balancing, service discovery, security, and analytics for containerized applications running in Kubernetes environments.

Kubernetes adoption demands a cloud-native approach for traffic management and application networking services, which VMware NSX Advanced Load Balancer provides. The VMware NSX Advanced Load Balancer delivers scalable, enterprise-class container ingress to deploy and manage container-based applications in production environments accessing Kubernetes clusters.

VMware NSX Advanced Load Balancer provides a container services fabric with a centralized control plane and distributed proxies:

Controller: A central control, management, and analytics plane that communicates with the Kubernetes primary node. The Controller includes two sub-components called Kubernetes Operator (AKO) and Multi-Cloud Kubernetes Operator (AMKO), which orchestrate all interactions with the kube-controller-manager. AKO is used for ingress services in each Kubernetes cluster, and AMKO is used in the context of multiple clusters, sites, or clouds. The Controller deploys and manages the lifecycle of data plane proxies, configures services, and aggregates telemetry analytics from the Service Engines.

Service Engine: A service proxy providing ingress services such as load balancing, WAF, GSLB, IPAM/DNS in the dataplane and reporting real-time telemetry analytics to the Controller.

For more on the actual implementation of load balancing, security applications, and web application firewalls, check out our Application Delivery How-To Videos.

Find out more about how VMware NSX Advanced Load Balancer’s cloud-native approach for traffic management and application networking services can assist your organization’s Kubernetes adoption here.