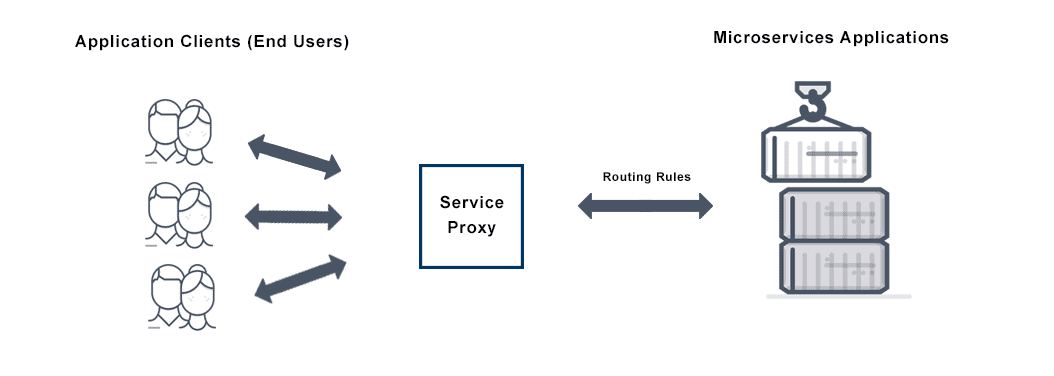

Service Proxy

A service proxy is a client-side proxy for microservices applications that lets application components send and receive traffic. Its main job is to route traffic to the correct destination service or container and to apply security policies. Service proxies also help ensure that applications meet their performance requirements.

What is a Service Proxy?

A service proxy is a network component that acts as an intermediary for requests seeking resources from the components of a microservices application. A client connects to the service proxy to request a particular service (a file, connection, web page, or other resource) provided by one of the microservices components. The service proxy evaluates the request and routes it to an appropriate instance according to the configured load-balancing algorithm.
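As a rough illustration of the routing step described above, the Python sketch below picks a backend instance with round-robin, one common load-balancing algorithm. The class name, service names, and addresses are hypothetical; a real service proxy would typically offer several algorithms (least connections, weighted, and so on) and skip unhealthy instances.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hypothetical proxy-side balancer that rotates requests across the
    instances registered for each service name."""

    def __init__(self, backends: dict[str, list[str]]) -> None:
        # One independent rotation per service, e.g. "cart" -> cycle([...]).
        self._rotations = {name: cycle(addrs) for name, addrs in backends.items()}

    def route(self, service: str) -> str:
        """Return the backend address that should receive the next request."""
        if service not in self._rotations:
            raise LookupError(f"no backends registered for {service!r}")
        return next(self._rotations[service])


if __name__ == "__main__":
    lb = RoundRobinBalancer({"cart": ["10.0.0.1:8080", "10.0.0.2:8080"]})
    print([lb.route("cart") for _ in range(3)])
    # ['10.0.0.1:8080', '10.0.0.2:8080', '10.0.0.1:8080']
```

Round-robin keeps no per-request state beyond the rotation position, which is why it is a common default; it does not account for instances that are slower or busier than others.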

What is a Web Service Proxy?

A web service proxy is a gatekeeper between a calling application and a target web service. Because every request passes through it, the proxy can introduce new behaviors into the request sequence. A web service proxy can:

• Add or remove HTTP headers.

• Terminate or offload SSL connections.

• Perform URL filtering and content switching.

• Provide content caching.

• Support blue-green deployments and canary testing.
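To make a few of the behaviors listed above concrete, here is a minimal Python sketch of a per-request pipeline that rewrites headers, switches content by URL prefix, and sends a weighted slice of traffic to a canary. The routing table, pool names, and header names (`X-Internal-Debug`, `X-Forwarded-By`) are hypothetical assumptions; SSL termination and caching are not shown.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Request:
    path: str
    headers: dict[str, str] = field(default_factory=dict)


# Hypothetical routing table: URL prefix -> (stable pool, canary pool, canary weight).
ROUTES = {
    "/api/": ("orders-v1", "orders-v2", 0.10),  # about 10% of /api/ traffic tries v2
    "/static/": ("asset-cache", None, 0.0),     # plain content switching, no canary
}
DEFAULT_POOL = "web-frontend"


def process(req: Request, rng: random.Random) -> str:
    """Rewrite the request headers in place and return the backend pool to use."""
    # Header manipulation: drop a header clients must not control and tag the
    # request so the backend knows it passed through the proxy.
    req.headers.pop("X-Internal-Debug", None)
    req.headers["X-Forwarded-By"] = "service-proxy"

    # Content switching: the longest matching URL prefix wins.
    for prefix in sorted(ROUTES, key=len, reverse=True):
        if req.path.startswith(prefix):
            stable, canary, weight = ROUTES[prefix]
            # Canary testing: divert a configurable fraction to the new version.
            # A weight of 1.0 moves all traffic at once, as in a blue-green cutover.
            if canary is not None and rng.random() < weight:
                return canary
            return stable
    return DEFAULT_POOL
```

Passing the random generator in explicitly keeps the canary split reproducible in tests; a production proxy might instead hash a user or session ID so that each client consistently sees the same version.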

How does a Service Proxy Work?

A service proxy acts as an intermediary between the client and the server; in a service proxy configuration, the client and the server never communicate directly. Service proxies are typically centrally managed and orchestrated. The client connects to the proxy and sends requests for resources (a document, web page, or file) located on a remote service. The proxy handles each request by fetching the required resources from the remote service and forwarding them to the client.
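The request flow described above can be sketched with Python's standard library alone: a stand-in backend service, and a forwarding proxy that fetches each resource on the client's behalf and relays the response. This is a minimal illustration under simplifying assumptions (GET only, successful responses only, whole bodies buffered in memory); the handler and helper names are hypothetical.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Opener that ignores HTTP_PROXY-style environment variables, so the proxy
# always connects straight to the configured upstream service.
DIRECT = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def make_forwarding_handler(upstream: str):
    """Return a handler class that relays GET requests to `upstream`,
    e.g. "http://127.0.0.1:9001". Clients only ever talk to the proxy."""

    class ForwardingHandler(BaseHTTPRequestHandler):
        def do_GET(self) -> None:
            # Fetch the resource from the backend service on the client's behalf.
            with DIRECT.open(upstream + self.path, timeout=5) as resp:
                status = resp.status
                content_type = resp.headers.get("Content-Type", "application/octet-stream")
                body = resp.read()
            # Forward the backend's response to the client.
            self.send_response(status)
            self.send_header("Content-Type", content_type)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args) -> None:
            pass  # silence per-request logging for the example

    return ForwardingHandler


class BackendHandler(BaseHTTPRequestHandler):
    """Stand-in microservice that reports which path it served."""

    def do_GET(self) -> None:
        body = f"backend served {self.path}".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass


def serve(handler_cls) -> ThreadingHTTPServer:
    """Start `handler_cls` on a free localhost port in a background thread."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), handler_cls)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    backend = serve(BackendHandler)
    proxy = serve(make_forwarding_handler(f"http://127.0.0.1:{backend.server_address[1]}"))
    # The client addresses only the proxy; the proxy reaches the backend.
    with DIRECT.open(f"http://127.0.0.1:{proxy.server_address[1]}/docs/a.txt") as r:
        print(r.read().decode())  # backend served /docs/a.txt
    proxy.shutdown()
    backend.shutdown()
```

A backend error status would raise `urllib.error.HTTPError` inside the proxy here; a fuller implementation would relay error responses, other HTTP methods, and streaming bodies rather than buffering everything.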

What are the Advantages of a Service Proxy?

Service proxies offer several advantages:

1) Granularity: The service proxy is a necessary part of the networking infrastructure for microservices applications. It provides application services at the fine granularity needed to deliver scalable microservices applications.

2) Traffic management: Service proxies can deliver both local and global load balancing services to applications within and across data centers.

3) Service Discovery: With the right architecture, service proxies can provide service discovery by mapping service host/domain names to the correct virtual IP addresses where they can be accessed.

4) Monitoring and Analytics: Service proxies can provide telemetry to help monitor application performance and alert on any application failures or anomalies.

5) Security: Service proxies can be configured to enforce L4-L7 security policies, application rate limiting, web application firewalling, URL filtering and other security services such as micro-segmentation.
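The service discovery advantage (item 3) amounts to keeping a lookup from service names to the virtual IPs where they can be reached. The Python sketch below shows that mapping in its simplest in-memory form; the class names, host names, and addresses are hypothetical, and a real deployment would populate the registry from an orchestrator such as Kubernetes rather than by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    vip: str  # virtual IP the service is reachable on
    port: int


def _key(host: str) -> str:
    # Normalise so "Cart.Shop.Local." and "cart.shop.local" are the same name.
    return host.lower().rstrip(".")


class ServiceRegistry:
    """Hypothetical in-memory map from service host/domain names to virtual IPs."""

    def __init__(self) -> None:
        self._entries: dict[str, Endpoint] = {}

    def register(self, host: str, vip: str, port: int) -> None:
        self._entries[_key(host)] = Endpoint(vip, port)

    def deregister(self, host: str) -> None:
        self._entries.pop(_key(host), None)

    def resolve(self, host: str) -> Endpoint:
        """Return where the proxy should send traffic for `host`."""
        try:
            return self._entries[_key(host)]
        except KeyError:
            raise LookupError(f"no service registered for {host!r}") from None
```

Resolving to a virtual IP rather than to individual container addresses lets instances come and go behind the VIP without clients noticing.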
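For the monitoring and analytics advantage (item 4), a proxy sits on every request path and can therefore measure latency and error rates directly. This Python sketch keeps a sliding window of recent requests for one service and reports alerts when illustrative thresholds are crossed; the window size and thresholds are assumptions, not recommended values.

```python
import math
from collections import deque
from dataclasses import dataclass


@dataclass
class Sample:
    latency_ms: float
    status: int


class ServiceTelemetry:
    """Keeps the last `window` requests for one service and flags anomalies."""

    def __init__(self, window: int = 100, max_error_rate: float = 0.05,
                 max_p95_ms: float = 250.0) -> None:
        self.samples: deque[Sample] = deque(maxlen=window)  # oldest samples drop off
        self.max_error_rate = max_error_rate
        self.max_p95_ms = max_p95_ms

    def record(self, latency_ms: float, status: int) -> None:
        self.samples.append(Sample(latency_ms, status))

    def error_rate(self) -> float:
        if not self.samples:
            return 0.0
        errors = sum(1 for s in self.samples if s.status >= 500)
        return errors / len(self.samples)

    def p95_latency(self) -> float:
        if not self.samples:
            return 0.0
        ordered = sorted(s.latency_ms for s in self.samples)
        # Nearest-rank percentile: smallest value covering 95% of samples.
        rank = math.ceil(0.95 * len(ordered))
        return ordered[rank - 1]

    def alerts(self) -> list[str]:
        found = []
        if self.error_rate() > self.max_error_rate:
            found.append(f"error rate {self.error_rate():.1%} exceeds {self.max_error_rate:.1%}")
        if self.p95_latency() > self.max_p95_ms:
            found.append(f"p95 latency {self.p95_latency():.0f} ms exceeds {self.max_p95_ms:.0f} ms")
        return found
```

Only 5xx statuses count as errors here, on the assumption that 4xx responses usually reflect client mistakes rather than service failures.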
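Application rate limiting (item 5) is often implemented with a token bucket. The Python sketch below is one such limiter, allowing short bursts up to a fixed capacity and a steady refill rate afterwards; the parameter values are illustrative, and the injectable clock is an assumption added so the behavior can be tested deterministically.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts of up to `capacity` requests and a sustained rate of
    `rate` requests per second."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.clock = clock
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond the bucket size.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a proxy would typically answer HTTP 429 here
```

A proxy would usually keep one bucket per client key (for example, source IP or API token) in a dictionary and call `allow()` before forwarding each request.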

Does Avi Offer a Service Proxy?

Yes! Avi offers a service proxy for microservices applications as part of its Container Ingress for Kubernetes- and OpenShift-based applications. Avi service proxies are a distributed fabric of software load balancers that run in individual containers deployed alongside (as a sidecar to) the container for each microservice. The capabilities of Avi’s Container Ingress are fully described in this white paper. Avi’s service proxies include:

• Full-featured load balancer, including advanced L7 policy-based switching, SSL offload, and data plane scripting

• East-west and north-south traffic management

• Health monitoring of microservices with automatic state synchronization

• 100% REST API with automation and self-service

• Centralized policy management and orchestration
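Health monitoring, as listed above, generally means probing each backend and taking it out of rotation after repeated failures. The Python sketch below is a generic illustration of that idea, not Avi's implementation: a member is marked down after `fall` consecutive failed probes and back up after `rise` consecutive successes (both values are assumptions). In a distributed proxy fabric, these state changes would also be synchronized to every proxy instance; this single-process sketch omits that.

```python
from dataclasses import dataclass


@dataclass
class Member:
    address: str
    healthy: bool = True
    fail_streak: int = 0
    ok_streak: int = 0


class HealthMonitoredPool:
    """Hypothetical pool that tracks probe results and exposes only healthy members."""

    def __init__(self, addresses: list[str], fall: int = 3, rise: int = 2) -> None:
        self.members = {a: Member(a) for a in addresses}
        self.fall = fall
        self.rise = rise

    def report_probe(self, address: str, success: bool) -> None:
        m = self.members[address]
        if success:
            m.ok_streak += 1
            m.fail_streak = 0
            if not m.healthy and m.ok_streak >= self.rise:
                m.healthy = True  # recovered: eligible for traffic again
        else:
            m.fail_streak += 1
            m.ok_streak = 0
            if m.healthy and m.fail_streak >= self.fall:
                m.healthy = False  # take the member out of rotation

    def healthy_members(self) -> list[str]:
        # Only members currently marked up receive new traffic.
        return [a for a, m in self.members.items() if m.healthy]
```

Requiring several consecutive results in each direction keeps a single dropped probe from flapping a member in and out of the pool.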

For more on the actual implementation of load balancing, security applications, and web application firewalls, check out our Application Delivery How-To Videos.
