Ingress Load Balancer Kubernetes Definition

In Kubernetes (K8s), ingress is a collection of routing rules that control how external users access the services in a Kubernetes cluster. There are several approaches to managing ingress in Kubernetes.

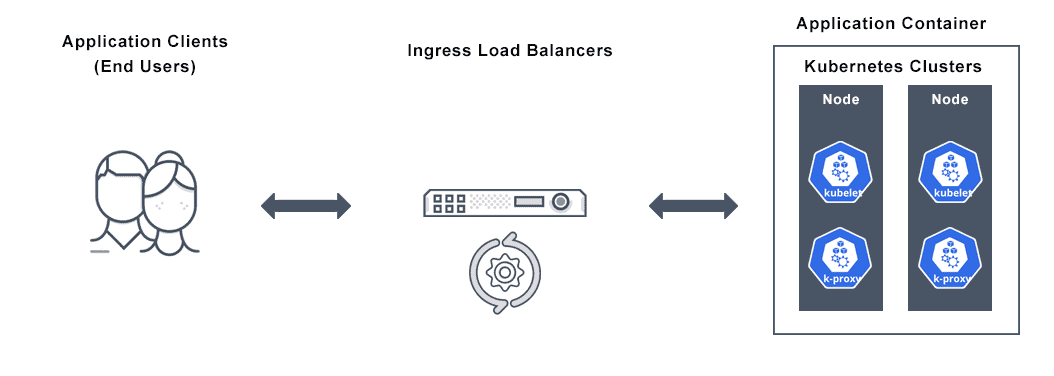

An application can be exposed to external users in several ways: through a Kubernetes ingress resource; through a Kubernetes NodePort service, which exposes the application on the same port on every node; or through an ingress load balancer for Kubernetes that points to a service in your cluster.

An external load balancer routes external traffic to a Kubernetes service in your cluster and is associated with a specific IP address. Its exact implementation depends on the load balancer options the cloud provider supports. Kubernetes deployments on bare metal may require custom load balancer implementations.

However, properly supported ingress load balancing for Kubernetes is the simplest and most secure way to route traffic.

What is Ingress Load Balancing for Kubernetes?

Kubernetes ingress is an API object that manages external access to the services in a Kubernetes cluster, typically HTTP and HTTPS requests. An ingress object can provide several capabilities, including SSL termination, load balancing, and name-based virtual hosting.
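To make name-based virtual hosting concrete, here is a minimal Python sketch of how an ingress controller might match a request's Host header against rule hosts. It follows the host-wildcard behavior documented for the Kubernetes Ingress API (a wildcard such as `*.example.com` matches exactly one extra DNS label), but the rule list, the service names, and the choice to try exact hosts before wildcards are illustrative assumptions, not any particular controller's implementation.

```python
from typing import List, Tuple


def host_matches(rule_host: str, request_host: str) -> bool:
    """Return True if an ingress rule host matches a request's Host header.

    An empty rule host matches every request. A wildcard host such as
    "*.example.com" matches exactly one extra DNS label. Any other rule host
    must match exactly. This sketch assumes bare, lowercase hostnames without ports.
    """
    if not rule_host:
        return True
    if rule_host.startswith("*."):
        suffix = rule_host[1:]  # e.g. ".example.com"
        if not request_host.endswith(suffix):
            return False
        label = request_host[: -len(suffix)]
        # Exactly one non-empty label may precede the suffix.
        return label != "" and "." not in label
    return rule_host == request_host


# Hypothetical (host, service) rules; the names are placeholders.
RULES: List[Tuple[str, str]] = [
    ("*.example.com", "wildcard-service"),
    ("shop.example.com", "shop-service"),
]
DEFAULT_BACKEND = "default-service"


def route_by_host(request_host: str) -> str:
    # Assumption: exact hosts are tried before wildcard hosts.
    exact = [(h, s) for h, s in RULES if not h.startswith("*.")]
    wildcard = [(h, s) for h, s in RULES if h.startswith("*.")]
    for rule_host, service in exact + wildcard:
        if host_matches(rule_host, request_host):
            return service
    return DEFAULT_BACKEND
```

With these rules, `shop.example.com` reaches `shop-service` and `blog.example.com` reaches `wildcard-service`, while `a.b.example.com` and the bare `example.com` fall through to the default backend.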

Routing traffic, such as HTTP and HTTPS traffic, is only the most obvious function of an ingress load balancer for Kubernetes. Kubernetes services have other important ingress requirements, for example:

- authentication

- content-based routing, such as routing based on HTTP request headers, the HTTP method, or other request attributes

- support for multiple protocols

- resilience, such as timeouts and rate limiting
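Content-based routing goes beyond the host and path matching that the core Ingress API offers. Depending on the platform, it is configured through controller-specific annotations or custom resources, or through the newer Gateway API, whose HTTPRoute matches can test headers and methods. The Python sketch below only illustrates the idea: the `Rule` and `Request` types, the header names, and the first-match-wins ordering are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Request:
    method: str
    path: str
    headers: Dict[str, str]


@dataclass
class Rule:
    backend: str                  # hypothetical backend service name
    method: Optional[str] = None  # None matches any HTTP method
    # Every listed header must be present with exactly this value.
    headers: Dict[str, str] = field(default_factory=dict)

    def matches(self, req: Request) -> bool:
        if self.method is not None and req.method.upper() != self.method.upper():
            return False
        # HTTP header names are case-insensitive, so compare them lowercased.
        req_headers = {name.lower(): value for name, value in req.headers.items()}
        return all(req_headers.get(name.lower()) == value
                   for name, value in self.headers.items())


def select_backend(rules: List[Rule], req: Request, default: str) -> str:
    """Return the backend of the first matching rule, else the default."""
    for rule in rules:
        if rule.matches(req):
            return rule.backend
    return default
```

For example, with a header rule `Rule("canary-service", headers={"X-Canary": "true"})` listed before a method rule `Rule("orders-write-service", method="POST")`, a GET carrying `X-Canary: true` goes to the canary backend, a POST without that header goes to the write service, and anything else falls back to the default.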

Support for some or all of these capabilities is important for all but the simplest cloud applications. Critically, it may be necessary to manage many of these requirements at the service level, inside Kubernetes.
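As an illustration of a resilience control applied per service, here is a minimal token-bucket rate limiter in Python. Token bucket is one common rate-limiting algorithm, chosen here for clarity; real ingress controllers and proxies have their own implementations and settings. The per-service registry, the service names, and the default rate and burst values are assumptions.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Token-bucket limiter: `rate` tokens per second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock       # injectable clock, handy for testing
        self.tokens = capacity   # start full so an initial burst is allowed
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical per-service registry: each backend service gets its own bucket.
_buckets: Dict[str, TokenBucket] = {}


def allow_request(service: str, rate: float = 5.0, burst: float = 10.0) -> bool:
    if service not in _buckets:
        _buckets[service] = TokenBucket(rate, burst)
    return _buckets[service].allow()
```

Keeping one bucket per service means a traffic spike against one backend exhausts only that backend's budget, which is the kind of service-level control the paragraph above describes.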

A load balancer, network load balancer, or load balancer service is the standard method for exposing a service to the internet. All traffic sent to that load balancer's single IP address is forwarded to the service.

Ingress is a complex yet powerful way to expose services. There are many types of Ingress controllers, such as Contour, Google Cloud Load Balancer, Istio, and NGINX, as well as plugins for Ingress controllers. Ingress is most useful for exposing multiple services using the same L7 protocol (typically HTTP) under the same external IP address.

Kubernetes Ingress vs Load Balancer

The way that Kubernetes ingress and the Kubernetes load balancer service interact can be confusing. However, the function of the K8s service is the most important aspect of the Kubernetes load balancer strategy.

A Kubernetes load balancer is a type of service, while Kubernetes ingress is a collection of rules, not a service. Ingress sits in front of multiple services and acts as the entry point for an entire cluster of pods. It allows multiple services that use the same L7 protocol (such as HTTP) to be exposed through a single IP address.

A Kubernetes cluster's ingress controller monitors ingress resources and updates the configuration of the underlying proxy or load balancer according to the ingress rules. On GKE, for example, the default ingress controller spins up an HTTP(S) load balancer, which supports both path-based and subdomain-based routing to backend services.
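Path-based routing can be sketched as follows. The matching rules mirror the documented `pathType` semantics of the Kubernetes Ingress API: `Exact` compares the whole path, `Prefix` compares path elements split on `/` (so `/api` matches `/api/v2` but not `/apiary`), the longest matching path wins, and `Exact` beats `Prefix` on a tie. Measuring "longest" by element count, the `PathRule` type, and the service names are simplifying assumptions of this sketch.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PathRule:
    path: str
    path_type: str  # "Exact" or "Prefix", mirroring the Ingress pathType field
    service: str    # hypothetical backend service name


def _elements(path: str) -> List[str]:
    # Split on "/" and drop empty segments, so "/foo/" and "/foo" compare equal for Prefix.
    return [seg for seg in path.split("/") if seg]


def _matches(rule: PathRule, request_path: str) -> bool:
    if rule.path_type == "Exact":
        return rule.path == request_path
    rule_elems = _elements(rule.path)
    # Prefix matching is element by element, not character by character.
    return _elements(request_path)[: len(rule_elems)] == rule_elems


def select_service(rules: List[PathRule], request_path: str) -> Optional[str]:
    candidates = [r for r in rules if _matches(r, request_path)]
    if not candidates:
        return None
    # Longest matching path wins; on a tie, Exact beats Prefix.
    best = max(candidates, key=lambda r: (len(_elements(r.path)), r.path_type == "Exact"))
    return best.service
```

With rules for `/` (Prefix), `/api` (Prefix), `/api` (Exact), and `/api/v2` (Prefix), a request for `/api` goes to the Exact rule, `/api/` and `/api/v1` go to the `/api` Prefix rule, `/api/v2/users` goes to `/api/v2`, and `/apiary` falls back to `/`.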

A Kubernetes load balancer is the default way to expose a service to the internet. On GKE, for example, it spins up a network load balancer that gives you a single IP address and forwards all traffic to your service. The Kubernetes load balancer service operates at L4, meaning it directs all traffic to the service without inspecting application content, and it can carry TCP and UDP traffic, including protocols such as gRPC that run over TCP. Ingress, by contrast, operates at L7. Each service exposed by a Kubernetes load balancer gets its own IP address (rather than sharing one, as with ingress) and requires a dedicated load balancer.
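The L4 versus L7 distinction shows up in what the balancer is allowed to look at. The Python sketch below picks a backend using only connection-level fields (protocol, addresses, ports) and never the HTTP path or headers that ingress routes on. Hashing the 5-tuple is an assumption made for illustration; kube-proxy and cloud load balancers use their own selection algorithms, and the addresses are documentation placeholders.

```python
import hashlib
from typing import List, NamedTuple


class FiveTuple(NamedTuple):
    protocol: str  # "TCP" or "UDP"
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def pick_backend(conn: FiveTuple, backends: List[str]) -> str:
    """Choose a backend from transport-level fields only.

    The payload (HTTP path, headers, method) is never read, which is why an
    L4 balancer cannot do path- or host-based routing.
    """
    key = "|".join(str(f) for f in conn).encode()
    digest = hashlib.sha256(key).digest()
    # Hashing keeps every packet of one connection on the same backend.
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]
```

Because the choice depends only on the connection, two requests for different URL paths on the same TCP connection always reach the same backend, which is exactly the behavior ingress rules are designed to go beyond.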

A Kubernetes network load balancer points to load balancers that reside outside the cluster, also called Kubernetes external load balancers. Some of these, such as the AWS and Google Cloud implementations, can work natively with pods that are externally routable.

Different Kubernetes platforms (such as Amazon EKS, GKE, or bare metal) support different ingress and load balancing features. This means files and configurations may not port between controllers and platforms.

With ingress, by contrast, users define a set of rules for the controller to apply, and some controllers implement those rules by provisioning a cloud load balancer. Ingress rules have no effect unless an ingress controller is running to process them. The NGINX and Amazon ALB controllers are among the most widely used ingress controllers.
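One way an ingress controller decides which rules are its own is the `ingressClassName` field on an Ingress, which names an IngressClass whose `spec.controller` value identifies the controller. The Python sketch below applies that selection to plain dictionaries shaped like the manifests. Treating an Ingress with no class as belonging to the single class annotated `ingressclass.kubernetes.io/is-default-class: "true"` is a simplification (in a real cluster an admission controller typically fills in the default class when the Ingress is created), and all object names are hypothetical.

```python
from typing import List, Optional

DEFAULT_CLASS_ANNOTATION = "ingressclass.kubernetes.io/is-default-class"


def default_class(classes: List[dict]) -> Optional[str]:
    """Name of the single IngressClass marked as default, or None."""
    defaults = [
        c["metadata"]["name"]
        for c in classes
        if c["metadata"].get("annotations", {}).get(DEFAULT_CLASS_ANNOTATION) == "true"
    ]
    # Zero or several defaults leave no unambiguous default class.
    return defaults[0] if len(defaults) == 1 else None


def ingresses_for_controller(controller: str, classes: List[dict],
                             ingresses: List[dict]) -> List[str]:
    """Names of the Ingress objects this controller should process."""
    owned = {c["metadata"]["name"] for c in classes
             if c["spec"]["controller"] == controller}
    fallback = default_class(classes)  # simplification for class-less Ingresses
    selected = []
    for ing in ingresses:
        cls = ing["spec"].get("ingressClassName") or fallback
        if cls in owned:
            selected.append(ing["metadata"]["name"])
    return selected
```

An Ingress whose `ingressClassName` points at a class no running controller owns is selected by nobody, which is this sketch's version of ingress rules having no effect without a controller.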

Does the VMware NSX Advanced Load Balancer Offer Kubernetes Ingress Services?

Yes. The VMware NSX Advanced Load Balancer provides container ingress, on-demand application scaling, L4-L7 load balancing, a web application firewall (WAF), real-time application analytics, management of Kubernetes objects, and global server load balancing (GSLB), all from a single platform.
